Collaborate: gracemann365@gmail.com

Personal Research Github : @davidgracemann

Jewels from chaos: A fascinating journey from abstract forms to physical objects

- Abstract

- Core Hypothesis

- Mission Parameters

- Key Research Imperatives

- Organizational Structure & Capitalization

- Research Mandate: Establishing Predictability

- Strategic Research Vectors

- Flagship Research Project: Epiphany CLI

- Research Infrastructure & Collaborative Framework

- Invitation for Collaboration

Gracemann is a research entity focused on engineering determinism within intrinsically stochastic systems and complex adaptive environments.

Our objective is the principled suppression, bounding, and control of probabilistic variance to achieve verifiably predictable behaviors through algorithmic control frameworks.

We apply this program in a strictly technical manner across quantitative trading systems, self-verifying AGI/software synthesis, and defense-grade autonomous systems, prioritizing reproducible execution, formal verification, and measurable performance guarantees.

By systematically modeling environmental dynamics using multi-agent architectures, we can convert noisy, high-variance decision problems into auditable and repeatable engineering workflows.

Concretely, we target three outcomes:

- Efficiency gains via deterministic synthesis: constrain the space of acceptable solutions (specifications, invariants, typed interfaces) so generation becomes search-and-verify rather than “sample-and-hope.”

- Security hardening through cryptoeconomic stability mechanisms: align incentives so that correct behavior is the dominant strategy under adversarial conditions (including partially faulty agents and corrupted inputs).

- Innovation acceleration by eliminating emergent non-determinism: reduce “unknown unknowns” by enforcing deterministic execution paths, explicit uncertainty accounting, and reproducible evaluation harnesses across agents and environments.

- 3-year foundational initiative (2025-2028) establishing deterministic AI and crypto engineering principles, with an explicit focus on measurement (benchmarks), verification (proofs/checks), and reproducibility (locked configs + deterministic execution).

- $125 Mn capital allocation diversified across nodes, deployed as engineering capacity for the following technical programs:

- Byzantine-tolerant architecture development: build multi-agent consensus layers and failure models that remain correct under adversarial or faulty components.

- Formally verified synthesis research: enforce correctness via specifications, proofs, and mechanically checkable constraints on generated artifacts.

- Stochasticity mitigation in decentralized systems: bound variance with deterministic controllers, robust estimators, and evaluation protocols that do not drift across runs.

- Variance suppression: Replace probabilistic outputs with fixed-outcome systems wherever the interface demands reliability (e.g., builds, deployments, signing, protocol logic), and isolate stochasticity behind explicitly measured and bounded modules.

- Chaos engineering: Apply control theory to complex adaptive systems by designing feedback loops, stability criteria, and “safe operating envelopes” for agentic systems under distribution shift.

- Deterministic crypto-economics: Integrate game-theoretic validation with AI forecasting so incentive design, security assumptions, and mechanism behavior are testable, adversarially stressable, and repeatable.
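To make the "feedback loop plus safe operating envelope" idea concrete, here is a minimal sketch. It assumes a toy plant `x[k+1] = x[k] + u[k]` and a proportional controller; the `Envelope` class, the gain, and all numeric values are hypothetical and chosen only for illustration. For this plant the unsaturated loop is stable when `|1 - gain| < 1`.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Envelope:
    """Hypothetical safe operating envelope: allowed range for one control action."""
    low: float
    high: float

    def clamp(self, value: float) -> float:
        return max(self.low, min(self.high, value))


def run_loop(setpoint: float, state: float, gain: float,
             envelope: Envelope, steps: int) -> list[float]:
    """Proportional controller on the toy plant x[k+1] = x[k] + u[k]."""
    trace = [state]
    for _ in range(steps):
        error = setpoint - state
        action = envelope.clamp(gain * error)  # the action never leaves the envelope
        state = state + action
        trace.append(state)
    return trace


trace = run_loop(setpoint=10.0, state=0.0, gain=0.5,
                 envelope=Envelope(-2.0, 2.0), steps=20)
print(trace[:6])  # [0.0, 2.0, 4.0, 6.0, 8.0, 9.0]
```

The clamp bounds how far any single step can move the system, while the gain condition governs convergence; the two properties can be tested independently.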

This section defines an institutional-grade operating model for Gracemann: clear governance, explicit division mandates, and—most importantly—well-defined technical interfaces between programs so research remains reproducible, reviewable, and composable.

- Entity ID: GRACEMAN_HOLDINGS_ALPHA.

- Legal structure: Privately held conglomerate / family office.

- Incorporation jurisdiction: Multi-jurisdictional (IP holding: Delaware/Ireland; operations: Germany).

- Equity structure: 100% founder controlled (Class F super-voting shares).

- Valuation methodology: Asset-based & IP-future-discounted.

- Operational doctrine: Vertical integration of intelligence, capital, and kinetics.

- Fiscal target: Q4 2028 (operational deployment).

- Single source of truth: Specs, proofs, benchmarks, and runbooks are versioned; no “tribal knowledge” dependencies.

- Deterministic release discipline: Every release is reproducible from pinned environments, with deterministic execution modes and audit logs.

- Safety & dual-use posture: Work is framed as defensive, verification-first engineering; high-risk capabilities are gated behind review, access control, and explicit threat models.

- Interface contracts: Cross-node dependencies require stable schemas, typed APIs, and measurable acceptance tests before integration.

The organization is structured as a closed-loop control system with explicit information flows:

- NODE_01 (Capital/Execution): Produces market infrastructure, data, and execution-grade systems; consumes verified models, forecasts, and constraints.

- NODE_02 (Intelligence/Proof): Produces self-verifying agents, theorem-driven synthesis, and verification tooling; consumes real-world workloads and adversarial test cases.

- NODE_03 (Autonomy/Resilience): Produces defense-grade autonomy research, robotics systems integration patterns, and adversarial resilience testing; consumes verified reasoning modules and simulation harnesses.

- NODE_04 (Operator/Sustainment): Produces human-in-the-loop operating protocols and cognitive reliability workflows; consumes measured failure modes and operational telemetry.

| Node | Code Name | Division type |
|---|---|---|
| NODE_01_QUANT | DAVID_GRACEMANN | Quantitative Finance & Algorithmic Trading |
| NODE_02_AGI | GRACEMAN | Foundational AI Research |
| NODE_03_WAR | GRACEMAN_WAR_LABS | Advanced Defense: Kinetic Hardware & Computational Models |
| NODE_04_BIO | CoOPER_LABS | Human Performance Optimization |

NODE_01_QUANT — Quantitative Finance & Algorithmic Trading

Mandate: Build deterministic, latency-aware trading research and execution systems where strategies are testable, reproducible, and governed by explicit risk constraints.

Technical stack (declared):

- Hardware: FPGA-accelerated NICs (SmartNICs), liquid-cooled execution servers, microwave/RF low-latency transmission arrays.

- Software: C++20 / Rust execution core, kernel-bypass networking (DPDK/Solarflare), proprietary stochastic differential equation (SDE) solvers.

Research vectors:

- Quantum probability in market microstructure.

- Non-ergodic risk modeling.

- Volatility surface arbitrage.

Hardening focus (what “determinism” means here):

- Reproducible research: deterministic backtests, frozen data snapshots, audit-grade experiment manifests.

- Execution integrity: deterministic order-routing logic, explicit failure modes, controlled retries, post-trade verification.

- Risk as code: constraints, limits, and scenario tests treated as versioned artifacts (reviewable and enforceable).
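One way to read "risk as code" is that limits become plain, versionable data and the pre-trade check is a pure function. The sketch below is an assumption-laden illustration: `RiskLimits`, `Order`, `check_order`, and every numeric limit are hypothetical names and values, not part of any declared stack.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RiskLimits:
    """Hypothetical limit set; in practice this would live in a versioned file."""
    max_order_qty: int
    max_position: int
    max_notional: float


@dataclass(frozen=True)
class Order:
    symbol: str
    qty: int      # signed: positive buys, negative sells
    price: float


def check_order(order: Order, position: int, limits: RiskLimits) -> list[str]:
    """Return the names of violated limits; an empty list means the order passes."""
    violations = []
    if abs(order.qty) > limits.max_order_qty:
        violations.append("max_order_qty")
    if abs(position + order.qty) > limits.max_position:
        violations.append("max_position")
    if abs(order.qty) * order.price > limits.max_notional:
        violations.append("max_notional")
    return violations


limits = RiskLimits(max_order_qty=100, max_position=250, max_notional=50_000.0)
print(check_order(Order("XYZ", 50, 101.5), position=0, limits=limits))     # []
print(check_order(Order("XYZ", 120, 101.5), position=200, limits=limits))  # ['max_order_qty', 'max_position']
```

Because the check has no hidden state, the same order, position, and limit set always produce the same verdict, which is what makes the limits reviewable and testable like any other artifact.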

NODE_02_AGI — Foundational AI Research

Mandate: Develop self-verifying AGI and computational mathematics systems that can prove, check, and reproduce their outputs under controlled conditions.

Technical stack (declared):

- Compute: H100/B200 NVLink clusters (4096+ cores), Infiniband, distributed training orchestration (Kubernetes/Slurm).

- Architecture: Neuro-symbolic hybrids, Lean/Coq integration, sparse MoE for inference efficiency.

Research vectors:

- Formal verification of software systems.

- Novel mathematical proof generation.

- Recursive self-improvement protocols.

Hardening focus (what “determinism” means here):

- Proof-carrying outputs: every critical artifact ships with checks (proofs, certificates, invariants, or counterexample traces).

- Evaluation discipline: stable benchmarks, regression gates, and reproducible harnesses across agents and model versions.

- Multi-agent reliability: adversarial collaboration (planner/engineer/reviewer) with consensus checks and explicit disagreement handling.
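The "proof-carrying outputs" item can be illustrated with a toy case: an untrusted producer returns a result together with a certificate, and a small trusted checker validates both without caring how the result was produced. Sorting is used here purely as a stand-in task; the function names are hypothetical.

```python
def sort_with_certificate(items: list[int]) -> tuple[list[int], list[int]]:
    """Untrusted producer: returns the sorted list plus a permutation certificate."""
    perm = sorted(range(len(items)), key=lambda i: items[i])
    return [items[i] for i in perm], perm


def check_certificate(items: list[int], output: list[int], perm: list[int]) -> bool:
    """Trusted checker: small, deterministic, independent of how output was made."""
    is_permutation = sorted(perm) == list(range(len(items)))
    matches_input = is_permutation and output == [items[i] for i in perm]
    is_ordered = all(a <= b for a, b in zip(output, output[1:]))
    return matches_input and is_ordered


data = [3, 1, 2]
output, perm = sort_with_certificate(data)
print(check_certificate(data, output, perm))      # True
print(check_certificate(data, [1, 2, 4], perm))   # False: output does not match the input
```

The design point is that only the checker needs to be trusted and audited; the producer could be replaced by a model or a search procedure without weakening the guarantee.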

NODE_03_WAR — Advanced Defense & Kinetic Hardware

Mandate: Research and engineer defense-grade autonomous systems with an emphasis on resilience, verification, simulation-driven validation, and strict safety constraints.

Technical stack (declared):

- Robotics: biomimetic actuators, swarm intelligence protocols, edge-AI perception (on-chip inference).

- Materials science: graphene-composite armor plating, metamaterial signature reduction (RF/thermal), self-healing polymer skins.

Research vectors:

- Bio-hybrid energy scavenging (long-duration operation).

- GPS-denied navigation (SLAM/LIDAR).

- Kinetic risk arbitrage.

Hardening focus (what “determinism” means here):

- Verified autonomy loops: deterministic state estimation updates where possible, explicit uncertainty modeling where not.

- Simulation-to-real discipline: scenario libraries, reproducible sim seeds, and acceptance tests tied to operational envelopes.

- Adversarial resilience: red-team test suites, fault injection, and graceful degradation requirements baked into CI.
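A minimal sketch of "reproducible sim seeds" combined with fault injection, assuming a toy scenario in which a sensor either reports a reading or drops out. The event format and the `simulate` function are hypothetical; the point is only that a locally seeded generator makes the whole trace, including the injected faults, replayable.

```python
import random


def simulate(seed: int, steps: int, fault_rate: float) -> list[str]:
    """Toy scenario run: one sensor event per step, with injected dropouts."""
    rng = random.Random(seed)  # local generator: no hidden global state
    events = []
    for step in range(steps):
        if rng.random() < fault_rate:
            events.append(f"{step}:sensor_dropout->hold_last_estimate")
        else:
            events.append(f"{step}:reading={rng.gauss(0.0, 1.0):.3f}")
    return events


first = simulate(seed=42, steps=50, fault_rate=0.2)
replay = simulate(seed=42, steps=50, fault_rate=0.2)
print(first == replay)  # True
```

An acceptance test can then pin a seed per scenario and compare traces across commits, so a behavioral change shows up as a diff rather than as run-to-run noise.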

NODE_04_BIO — Human Performance Optimization

Mandate: Increase operator reliability and decision quality through measurement-first protocols, fatigue mitigation, and repeatable performance routines.

Technical stack (declared):

- Biotech: CRISPR/Cas9 modulation vectors, peptide synthesis arrays, tDCS rigs.

- Protocols: rapid-induction neuroplasticity states, adrenal stress response dampening, sleep architecture optimization.

Research vectors:

- Nootropic stack pharmacokinetics.

- Adult neurogenesis stimulation.

- Operator fatigue mitigation.

Hardening focus (what “determinism” means here):

- Protocol reproducibility: strict logging, controlled variables, and measurable endpoints for every intervention.

- Operator-in-the-loop safety: “stop conditions,” escalation paths, and conservative defaults for high-stakes operations.

- Long-horizon stability: prioritize maintainability, monitoring, and reversibility over short-term performance spikes.

In operational landscapes with high-dimensional data flows and emergent interdependencies, Gracemann’s mandate is to convert chaotic, partially observable systems into computationally tractable and auditable engineering loops using deterministic system theory.

We do not claim that real-world environments stop being stochastic; instead, we isolate stochasticity behind explicit interfaces (data contracts, uncertainty bounds, and validation gates) and enforce determinism where it matters: execution, verification, replay, and governance. This enables robust autonomous orchestration and decision-making by applying principles from control theory for deterministic systems to design stable feedback loops, measurable constraints, and reproducible system behavior.
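As a rough illustration of isolating stochasticity behind an explicit interface, the sketch below assumes a stochastic module must return a value with explicit bounds, and that a deterministic gate turns that estimate into one of a fixed set of decisions. The `Estimate` contract, the decision labels, and the thresholds are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Estimate:
    """Output contract for a stochastic module: a value plus explicit bounds."""
    value: float
    lower: float
    upper: float


def gate(estimate: Estimate, threshold: float, max_width: float) -> str:
    """Deterministic decision rule: same estimate in, same decision out."""
    if not (estimate.lower <= estimate.value <= estimate.upper):
        return "reject"      # contract violation
    if estimate.upper - estimate.lower > max_width:
        return "escalate"    # too uncertain to act automatically
    if estimate.lower > threshold:
        return "act"
    return "hold"


print(gate(Estimate(1.0, 0.9, 1.1), threshold=0.5, max_width=0.5))  # act
print(gate(Estimate(1.0, 0.2, 1.8), threshold=0.5, max_width=0.5))  # escalate
```

The upstream model may be as noisy as it likes; everything downstream of the gate can be replayed from the logged estimates alone.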

We address Distributed Ledger Technology (DLT) volatility by leveraging Large Language Models (LLMs) as structured analysis engines: extracting protocol semantics, forecasting scenario-conditioned outcomes, and generating adversarial test plans that drive deterministic control decisions.

Our work integrates cryptoeconomic principles (incentive alignment, mechanism robustness, game-theoretic validation) with model-assisted pattern recognition to reduce systemic risk and improve predictability in decentralized networks, informed by cryptoeconomic stability research.

Key innovations:

- LLM-augmented forecasting: Training specialized models on DLT transaction patterns to produce scenario libraries and measurable risk signals (not narrative predictions).

- Consensus mechanisms: Designing incentive-compatible protocols with explicit adversary models, failure modes, and testable safety properties.

- Cryptoeconomic resilience: Stress-testing via LLM-generated adversarial scenarios, then converting discovered weaknesses into deterministic guardrails (constraints, slashing conditions, circuit breakers, and rollout policies).

We counter LLM non-determinism by constraining generation into verification-first pipelines: specifications and invariants define the solution space, synthesis proposes candidates, and deterministic checkers (proofs/tests/type constraints) accept or reject artifacts.

| Traditional LLMs | Deterministic Architectures |
|---|---|
| Probabilistic output | Fixed-outcome systems |
| Unauditable paths | Formally verified reasoning chains |
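The propose-then-check pipeline described above can be sketched in a few lines. This is a toy under stated assumptions: the "synthesizer" is a fixed-order enumeration standing in for a model, the artifact is a pair of coefficients for `f(x) = a*x + b`, and the specification is three input/output examples. All names are hypothetical.

```python
from itertools import product
from typing import Callable, Iterator, Optional

Candidate = tuple[int, int]  # hypothetical artifact: coefficients (a, b) of f(x) = a*x + b


def propose() -> Iterator[Candidate]:
    """Stand-in for a synthesizer: enumerates candidates in a fixed order."""
    yield from product(range(-3, 4), repeat=2)


def satisfies_spec(candidate: Candidate) -> bool:
    """Deterministic checker: the spec is a set of input/output examples."""
    a, b = candidate
    examples = [(0, 1), (1, 3), (2, 5)]
    return all(a * x + b == y for x, y in examples)


def synthesize(checker: Callable[[Candidate], bool]) -> Optional[Candidate]:
    """Accept the first candidate the checker approves; reject everything else."""
    for candidate in propose():
        if checker(candidate):
            return candidate
    return None


print(synthesize(satisfies_spec))  # (2, 1)
```

Swapping the enumerator for a sampling model changes how quickly a candidate is found, but not what counts as acceptable: acceptance is decided by the checker alone.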

Implementation:

- Synthetic code generation: Producing formally verified software via proof-carrying artifacts, regression gates, and replayable build/run manifests.

- Multi-agent consensus: Eliminating conflicts via Byzantine-tolerant architectures where agents must converge on validated outputs under adversarial disagreement and incomplete information.
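For the multi-agent consensus item, the sketch below shows only the vote-counting step, using the standard Byzantine quorum sizes: with at most `f` faulty agents, at least `3f + 1` agents are required and a value is accepted only when `2f + 1` of them report it. It is not a full agreement protocol (no message rounds, signatures, or timeouts), and the agent names and digests are hypothetical.

```python
from collections import Counter
from typing import Optional


def quorum_decision(votes: dict[str, str], max_faulty: int) -> Optional[str]:
    """Return the artifact digest backed by at least 2f + 1 agents, else None."""
    if len(votes) < 3 * max_faulty + 1:
        raise ValueError("need at least 3f + 1 agents to tolerate f faults")
    digest, count = Counter(votes.values()).most_common(1)[0]
    return digest if count >= 2 * max_faulty + 1 else None


agreed = {"engineer": "abc", "reviewer": "abc", "qa": "abc", "planner": "zzz"}
split = {"engineer": "abc", "reviewer": "abc", "qa": "zzz", "planner": "zzz"}
print(quorum_decision(agreed, max_faulty=1))  # abc
print(quorum_decision(split, max_faulty=1))   # None
```

Returning `None` on a split vote makes disagreement an explicit outcome that a caller must handle, rather than something resolved by an arbitrary tie-break.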

Flagship Research Project: Epiphany CLI

Epiphany CLI operationalizes deterministic, multi-agent software engineering by turning stochastic model outputs into constrained actions: every mutation is traced, checked, and made reproducible under a deterministic execution discipline.

Epiphany CLI implements deterministic AI through:

- Deterministic pipelines: Enforcing reproducibility via retry logic (bounded, observable retries with consistent replay semantics).

- Stochasticity mitigation: Eliminating butterfly-effect amplification with algorithmic safeguards (guardrails, rollback points, and adversarial evaluation).
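A minimal sketch of bounded, observable retries, assuming each step receives its attempt number and every attempt is written to a log that can later be replayed or audited. The function names, log format, and the `flaky` step are hypothetical and are not taken from the Epiphany CLI codebase.

```python
from typing import Callable


def run_with_retries(action: Callable[[int], str],
                     max_attempts: int) -> tuple[str, list[str]]:
    """Run action(attempt) up to max_attempts times and log every attempt."""
    log = []
    for attempt in range(1, max_attempts + 1):
        try:
            result = action(attempt)
        except RuntimeError as exc:
            log.append(f"attempt={attempt} status=error detail={exc}")
            continue
        log.append(f"attempt={attempt} status=ok")
        return result, log
    raise RuntimeError(f"gave up after {max_attempts} attempts: {log}")


def flaky(attempt: int) -> str:
    """Hypothetical step that fails twice, then succeeds."""
    if attempt < 3:
        raise RuntimeError("transient failure")
    return "artifact-v1"


result, log = run_with_retries(flaky, max_attempts=5)
print(result)    # artifact-v1
print(len(log))  # 3
```

The retry budget is fixed up front and exhaustion is an explicit error, so a pipeline cannot loop indefinitely or fail silently.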

Fusing Deterministic AI with Applied Crypto Engineering by Eliminating the Influence of the Butterfly Effect

This approach establishes reliable infrastructure combining cryptoeconomic governance with deterministic synthesis. Our implementation transcends probabilistic workflows by enforcing deterministic validation gates and consensus-driven review to keep system evolution predictable, even under adversarial inputs and distributed execution.

Our multi-layered architecture hardens end-to-end determinism through formal verification, traceability, and incentive alignment:

- Graph-Based Codebase Representation: A Typed Property Graph (TPG) in Neo4j serves as the canonical “system map,” enabling dependency queries, change impact analysis, and invariant tracking. This leverages knowledge graph embeddings to encode module semantics and supports correctness workflows through formal program verification.

- Multi-Agent Cognitive Framework (Spectrum Persona Protocol): A graph-directed orchestration layer coordinates specialized, adversarially-collaborative agents (Engineer/Reviewer/QA). This implements Byzantine Agreement protocols to force convergence on validated artifacts, while incorporating game-theoretic validation as a discipline for incentive-robust decisions.

- Deterministic Execution & Validation Layer: A strict layer for reproducibility and integrity: Hallucination Probability Scoring (HPS) with Monte Carlo Tree Search for controlled exploration, Abstract Syntax Tree (AST) snapshotting, and atomic rollbacks. All mutations are gated by formal verification (SMT/model checking) and temporal logic compliance for safety-critical workflows.

- Kernel Implementation in Rust/C++20: Core subsystems leverage Rust ownership and C++20 concepts to achieve deterministic performance, memory safety, and controlled concurrency. This reduces undefined behavior and race-driven nondeterminism using linear type systems and RAII patterns.

- eBPF-based OS Layer Integration (Experimental): An OS-level integration layer uses eBPF for kernel-grade observability and sandbox enforcement, enabling real-time system call interception, resource quota enforcement, and container security via LSM integration.
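To illustrate AST snapshotting with atomic rollback, here is a small sketch that uses Python's standard `ast` module as a stand-in for whatever parser the real system uses. The gate shown (the mutation must parse and must still define a named function) is a deliberately simple, hypothetical substitute for the SMT and model-checking gates described above.

```python
import ast
import hashlib


def snapshot(source: str) -> str:
    """Digest of the parsed AST; formatting-only edits keep the same digest."""
    tree = ast.parse(source)
    return hashlib.sha256(ast.dump(tree).encode("utf-8")).hexdigest()


def apply_mutation(source: str, mutated: str, must_define: str) -> str:
    """Accept the mutation only if it parses and still defines `must_define`."""
    try:
        tree = ast.parse(mutated)
    except SyntaxError:
        return source  # rollback: keep the last good state
    names = {node.name for node in ast.walk(tree)
             if isinstance(node, ast.FunctionDef)}
    return mutated if must_define in names else source


good = "def f(x): return x+1"
print(snapshot(good) == snapshot("def f(x):\n    return x + 1  # same logic\n"))  # True
print(apply_mutation(good, "def f(:", must_define="f") == good)                   # True
```

Because `apply_mutation` returns either the fully accepted new source or the untouched old one, there is no partially applied state to clean up.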

Epiphany CLI represents a shift toward autonomous, verifiable, and governance-aware software engineering—combining theorem proving with distributed consensus to make system behavior repeatable and reviewable at scale.
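The change impact analysis attributed to the Typed Property Graph can be approximated, for illustration, with a plain in-memory dependency map instead of Neo4j. The module names and edges below are invented for the example; the traversal is an ordinary breadth-first search over reversed dependency edges.

```python
from collections import deque

# Hypothetical edges: module -> modules it depends on.
DEPENDS_ON = {
    "cli": ["planner", "executor"],
    "planner": ["graph_store"],
    "executor": ["graph_store", "sandbox"],
    "graph_store": [],
    "sandbox": [],
}


def impacted_by(changed: str, depends_on: dict[str, list[str]]) -> list[str]:
    """Modules that transitively depend on `changed`, in sorted order."""
    reverse: dict[str, list[str]] = {name: [] for name in depends_on}
    for module, deps in depends_on.items():
        for dep in deps:
            reverse[dep].append(module)
    seen, queue = set(), deque([changed])
    while queue:
        for dependant in reverse[queue.popleft()]:
            if dependant not in seen:
                seen.add(dependant)
                queue.append(dependant)
    return sorted(seen)


print(impacted_by("graph_store", DEPENDS_ON))  # ['cli', 'executor', 'planner']
print(impacted_by("sandbox", DEPENDS_ON))      # ['cli', 'executor']
```

Sorting the result keeps the output independent of traversal order, so the same graph and the same change always yield the same impact list.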

Our commitment to this mandate is epitomized by Epiphany CLI, a command-line interface engineered for deterministic, multi-agent code reasoning. As an evolution of the Gemini CLI architecture, Epiphany CLI is designed to mitigate the inherent stochasticity of large language models.

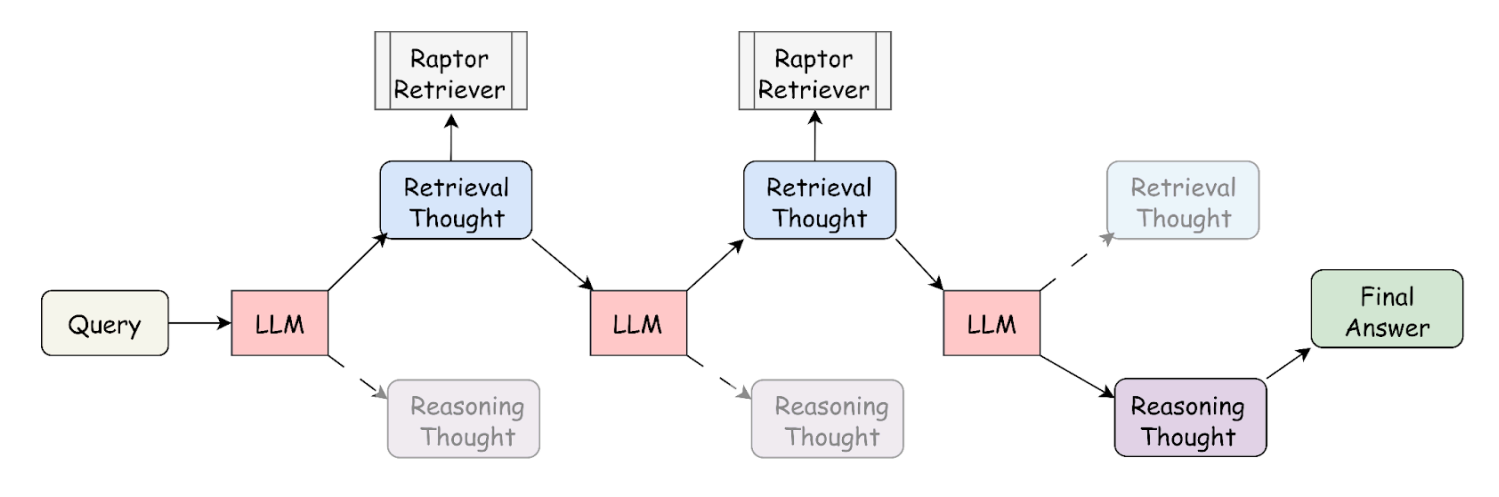

The graph-based, multi-agent architecture of Epiphany CLI (image credit: Pathway Community)

Gracemann actively solicits collaboration with researchers, principal engineers, and organizations aligned with our vision of deterministic distributed systems.

Note on attribution and affiliation: The external institutions and references linked below are not collaborators, partners, or affiliated entities; they are cited because their published research and educational material has informed and inspired parts of this work.


- Epiphany CLI repository — Core implementation, specifications, and design artifacts (architecture notes, invariants, and reproducibility primitives).

- Technical Discussions — Open technical dialogue on agent reliability, deterministic evaluation, BFT-style consensus for multi-agent workflows, and verification-first engineering.

We welcome collaborators who can help harden the system across any of the following axes:

- Deterministic execution: reproducible runs, pinned environments, replayable pipelines, and traceable state transitions.

- Formal methods: specs, invariants, proofs, model checking, SMT integration, and proof-carrying artifacts.

- Distributed systems: consensus, fault models, adversarial assumptions, and correctness under partial failure.

- Cryptoeconomics: incentive design, mechanism robustness, adversarial stress testing, and measurable safety properties.

- Multi-agent systems: orchestration, role separation (engineer/reviewer/QA), disagreement protocols, and deterministic adjudication.

To keep the repo “research-grade” and reviewable, we optimize for:

- Verifiable claims: prefer measurable benchmarks, proofs, or test suites over qualitative assertions.

- Deterministic evaluation: any result should be reproducible from documented inputs/configs; no hidden steps.

- Clear interfaces: changes that affect other modules must come with schemas, typed APIs, and migration notes.

- Adversarial thinking: include threat models, failure modes, and negative tests where relevant.
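One lightweight way to support "reproducible from documented inputs/configs" is to derive a stable digest from the run manifest and record it next to every result. The sketch below assumes the manifest is JSON-serializable; the field names and values are hypothetical.

```python
import hashlib
import json


def manifest_digest(manifest: dict) -> str:
    """Stable digest: key order and whitespace do not affect the result."""
    canonical = json.dumps(manifest, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


run_a = {"seed": 7, "dataset": "snapshot-2025-01",
         "model": {"name": "demo", "temperature": 0}}
run_b = {"model": {"temperature": 0, "name": "demo"},
         "dataset": "snapshot-2025-01", "seed": 7}

print(manifest_digest(run_a) == manifest_digest(run_b))                   # True
print(manifest_digest(run_a) == manifest_digest({**run_a, "seed": 8}))    # False
```

Two results that carry the same digest claim the same inputs, so a reviewer can check whether a reported number was produced under the documented configuration.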

Choose the path that matches your strength:

- Research contributions: formalization of claims, proof sketches → mechanized proofs, benchmark design, failure-mode taxonomy.

- Engineering contributions: determinism hardening (pinning, snapshots, rollback points), CI gates, regression harnesses, performance instrumentation.

- Security contributions: attack surface review, sandboxing strategies, policy suggestions, and responsible disclosure processes.

- This project prioritizes defensive, verification-first engineering and avoids operational guidance for harm.

- If you believe a feature or discussion crosses a safety line, raise it immediately via discussions or a private report route (preferred).

The following are referenced as intellectual inspirations only:

- Santa Fe Institute — Complex adaptive systems, emergence, and network dynamics.

- Chaos Theory Research (UMD Physics) — Nonlinear dynamics, chaos control, and complex systems foundations.

- Formal Methods in distributed systems

- Cryptoeconomic Protocol Design and game-theoretic analysis

- Automated Program Synthesis with formal guarantees

- Byzantine Fault Tolerance in multi-agent architectures

If you want to collaborate (or sanity-check a claim), reach out:

- Email: gracemann365@gmail.com

- GitHub: @davidgracemann