diff --git a/.github/ISSUE_TEMPLATE.md b/.github/ISSUE_TEMPLATE.md

index a180d0516..c17e1dc1c 100644

--- a/.github/ISSUE_TEMPLATE.md

+++ b/.github/ISSUE_TEMPLATE.md

@@ -10,6 +10,7 @@ Have you read GitHub for Unity's Code of Conduct? By filing an Issue, you are ex

- Include the log file in the PR.

- On Windows, the extension log file is at `%LOCALAPPDATA%\GitHubUnity\github-unity.log`

- On macOS, the extension log file is at `~/Library/Logs/GitHubUnity/github-unity.log`

+ - On Linux, the extension log file is at `~/.local/share/GitHubUnity/github-unity.log`

### Description

diff --git a/.gitignore b/.gitignore

index 67d4e53bc..7fc38bf13 100644

--- a/.gitignore

+++ b/.gitignore

@@ -7,6 +7,5 @@ _NCrunch_GitHub.Unity

.DS_Store

build/

TestResult.xml

-submodules/

*.stackdump

*.lastcodeanalysissucceeded

\ No newline at end of file

diff --git a/.gitmodules b/.gitmodules

index d9e14a3e9..8ecda521f 100644

--- a/.gitmodules

+++ b/.gitmodules

@@ -1,3 +1,6 @@

[submodule "script"]

path = script

url = git@github.com:github-for-unity/UnityBuildScripts

+[submodule "submodules/packaging"]

+ path = submodules/packaging

+ url = https://github.com/github-for-unity/packaging

diff --git a/GitHub.Unity.sln b/GitHub.Unity.sln

index 0197b47fc..0707a70fa 100644

--- a/GitHub.Unity.sln

+++ b/GitHub.Unity.sln

@@ -5,8 +5,12 @@ VisualStudioVersion = 14.0.25420.1

MinimumVisualStudioVersion = 10.0.40219.1

Project("{FAE04EC0-301F-11D3-BF4B-00C04F79EFBC}") = "GitHub.Unity", "src\UnityExtension\Assets\Editor\GitHub.Unity\GitHub.Unity.csproj", "{ADD7A18B-DD2A-4C22-A2C1-488964EFF30A}"

EndProject

+Project("{FAE04EC0-301F-11D3-BF4B-00C04F79EFBC}") = "GitHub.Unity.45", "src\UnityExtension\Assets\Editor\GitHub.Unity\GitHub.Unity.45.csproj", "{ADD7A18B-DD2A-4C22-A2C1-488964EFF30B}"

+EndProject

Project("{FAE04EC0-301F-11D3-BF4B-00C04F79EFBC}") = "GitHub.Api", "src\GitHub.Api\GitHub.Api.csproj", "{B389ADAF-62CC-486E-85B4-2D8B078DF763}"

EndProject

+Project("{FAE04EC0-301F-11D3-BF4B-00C04F79EFBC}") = "GitHub.Api.45", "src\GitHub.Api\GitHub.Api.45.csproj", "{B389ADAF-62CC-486E-85B4-2D8B078DF76B}"

+EndProject

Project("{FAE04EC0-301F-11D3-BF4B-00C04F79EFBC}") = "GitHub.Logging", "src\GitHub.Logging\GitHub.Logging.csproj", "{BB6A8EDA-15D8-471B-A6ED-EE551E0B3BA0}"

EndProject

Project("{FAE04EC0-301F-11D3-BF4B-00C04F79EFBC}") = "CopyLibrariesToDevelopmentFolder", "src\packaging\CopyLibrariesToDevelopmentFolder\CopyLibrariesToDevelopmentFolder.csproj", "{44257C81-EE4A-4817-9AF4-A26C02AA6DD4}"

@@ -31,6 +35,10 @@ Project("{FAE04EC0-301F-11D3-BF4B-00C04F79EFBC}") = "TestWebServer", "src\tests\

EndProject

Project("{FAE04EC0-301F-11D3-BF4B-00C04F79EFBC}") = "UnityTests", "src\UnityExtension\Assets\Editor\UnityTests\UnityTests.csproj", "{462CDBD4-0DDA-4854-1B13-CFDACBFB66F5}"

EndProject

+Project("{FAE04EC0-301F-11D3-BF4B-00C04F79EFBC}") = "ExtensionLoader", "src\UnityExtension\Assets\Editor\GitHub.Unity\ExtensionLoader\ExtensionLoader.csproj", "{6B0EAB30-511A-44C1-87FE-D9AB7E34D115}"

+EndProject

+Project("{FAE04EC0-301F-11D3-BF4B-00C04F79EFBC}") = "UnityShim", "src\UnityShim\UnityShim.csproj", "{F94F8AE1-C171-4A83-89E8-6557CA91A188}"

+EndProject

Global

GlobalSection(SolutionConfigurationPlatforms) = preSolution

Debug|Any CPU = Debug|Any CPU

@@ -46,6 +54,13 @@ Global

{ADD7A18B-DD2A-4C22-A2C1-488964EFF30A}.dev|Any CPU.Build.0 = dev|Any CPU

{ADD7A18B-DD2A-4C22-A2C1-488964EFF30A}.Release|Any CPU.ActiveCfg = Release|Any CPU

{ADD7A18B-DD2A-4C22-A2C1-488964EFF30A}.Release|Any CPU.Build.0 = Release|Any CPU

+ {ADD7A18B-DD2A-4C22-A2C1-488964EFF30B}.Debug|Any CPU.ActiveCfg = Debug|Any CPU

+ {ADD7A18B-DD2A-4C22-A2C1-488964EFF30B}.Debug|Any CPU.Build.0 = Debug|Any CPU

+ {ADD7A18B-DD2A-4C22-A2C1-488964EFF30B}.DebugNoUnity|Any CPU.ActiveCfg = Debug|Any CPU

+ {ADD7A18B-DD2A-4C22-A2C1-488964EFF30B}.dev|Any CPU.ActiveCfg = dev|Any CPU

+ {ADD7A18B-DD2A-4C22-A2C1-488964EFF30B}.dev|Any CPU.Build.0 = dev|Any CPU

+ {ADD7A18B-DD2A-4C22-A2C1-488964EFF30B}.Release|Any CPU.ActiveCfg = Release|Any CPU

+ {ADD7A18B-DD2A-4C22-A2C1-488964EFF30B}.Release|Any CPU.Build.0 = Release|Any CPU

{B389ADAF-62CC-486E-85B4-2D8B078DF763}.Debug|Any CPU.ActiveCfg = Debug|Any CPU

{B389ADAF-62CC-486E-85B4-2D8B078DF763}.Debug|Any CPU.Build.0 = Debug|Any CPU

{B389ADAF-62CC-486E-85B4-2D8B078DF763}.DebugNoUnity|Any CPU.ActiveCfg = Debug|Any CPU

@@ -54,6 +69,14 @@ Global

{B389ADAF-62CC-486E-85B4-2D8B078DF763}.dev|Any CPU.Build.0 = dev|Any CPU

{B389ADAF-62CC-486E-85B4-2D8B078DF763}.Release|Any CPU.ActiveCfg = Release|Any CPU

{B389ADAF-62CC-486E-85B4-2D8B078DF763}.Release|Any CPU.Build.0 = Release|Any CPU

+ {B389ADAF-62CC-486E-85B4-2D8B078DF76B}.Debug|Any CPU.ActiveCfg = Debug|Any CPU

+ {B389ADAF-62CC-486E-85B4-2D8B078DF76B}.Debug|Any CPU.Build.0 = Debug|Any CPU

+ {B389ADAF-62CC-486E-85B4-2D8B078DF76B}.DebugNoUnity|Any CPU.ActiveCfg = Debug|Any CPU

+ {B389ADAF-62CC-486E-85B4-2D8B078DF76B}.DebugNoUnity|Any CPU.Build.0 = Debug|Any CPU

+ {B389ADAF-62CC-486E-85B4-2D8B078DF76B}.dev|Any CPU.ActiveCfg = dev|Any CPU

+ {B389ADAF-62CC-486E-85B4-2D8B078DF76B}.dev|Any CPU.Build.0 = dev|Any CPU

+ {B389ADAF-62CC-486E-85B4-2D8B078DF76B}.Release|Any CPU.ActiveCfg = Release|Any CPU

+ {B389ADAF-62CC-486E-85B4-2D8B078DF76B}.Release|Any CPU.Build.0 = Release|Any CPU

{BB6A8EDA-15D8-471B-A6ED-EE551E0B3BA0}.Debug|Any CPU.ActiveCfg = Debug|Any CPU

{BB6A8EDA-15D8-471B-A6ED-EE551E0B3BA0}.Debug|Any CPU.Build.0 = Debug|Any CPU

{BB6A8EDA-15D8-471B-A6ED-EE551E0B3BA0}.DebugNoUnity|Any CPU.ActiveCfg = Debug|Any CPU

@@ -132,6 +155,22 @@ Global

{462CDBD4-0DDA-4854-1B13-CFDACBFB66F5}.dev|Any CPU.Build.0 = Debug|Any CPU

{462CDBD4-0DDA-4854-1B13-CFDACBFB66F5}.Release|Any CPU.ActiveCfg = Release|Any CPU

{462CDBD4-0DDA-4854-1B13-CFDACBFB66F5}.Release|Any CPU.Build.0 = Release|Any CPU

+ {6B0EAB30-511A-44C1-87FE-D9AB7E34D115}.Debug|Any CPU.ActiveCfg = Debug|Any CPU

+ {6B0EAB30-511A-44C1-87FE-D9AB7E34D115}.Debug|Any CPU.Build.0 = Debug|Any CPU

+ {6B0EAB30-511A-44C1-87FE-D9AB7E34D115}.DebugNoUnity|Any CPU.ActiveCfg = Debug|Any CPU

+ {6B0EAB30-511A-44C1-87FE-D9AB7E34D115}.DebugNoUnity|Any CPU.Build.0 = Debug|Any CPU

+ {6B0EAB30-511A-44C1-87FE-D9AB7E34D115}.dev|Any CPU.ActiveCfg = dev|Any CPU

+ {6B0EAB30-511A-44C1-87FE-D9AB7E34D115}.dev|Any CPU.Build.0 = dev|Any CPU

+ {6B0EAB30-511A-44C1-87FE-D9AB7E34D115}.Release|Any CPU.ActiveCfg = Release|Any CPU

+ {6B0EAB30-511A-44C1-87FE-D9AB7E34D115}.Release|Any CPU.Build.0 = Release|Any CPU

+ {F94F8AE1-C171-4A83-89E8-6557CA91A188}.Debug|Any CPU.ActiveCfg = Debug|Any CPU

+ {F94F8AE1-C171-4A83-89E8-6557CA91A188}.Debug|Any CPU.Build.0 = Debug|Any CPU

+ {F94F8AE1-C171-4A83-89E8-6557CA91A188}.DebugNoUnity|Any CPU.ActiveCfg = Debug|Any CPU

+ {F94F8AE1-C171-4A83-89E8-6557CA91A188}.DebugNoUnity|Any CPU.Build.0 = Debug|Any CPU

+ {F94F8AE1-C171-4A83-89E8-6557CA91A188}.dev|Any CPU.ActiveCfg = dev|Any CPU

+ {F94F8AE1-C171-4A83-89E8-6557CA91A188}.dev|Any CPU.Build.0 = dev|Any CPU

+ {F94F8AE1-C171-4A83-89E8-6557CA91A188}.Release|Any CPU.ActiveCfg = Release|Any CPU

+ {F94F8AE1-C171-4A83-89E8-6557CA91A188}.Release|Any CPU.Build.0 = Release|Any CPU

EndGlobalSection

GlobalSection(SolutionProperties) = preSolution

HideSolutionNode = FALSE

@@ -147,4 +186,7 @@ Global

{3DD3451C-30FA-4294-A3A9-1E080342F867} = {D17F1B4C-42DC-4E78-BCEF-9F239A084C4D}

{462CDBD4-0DDA-4854-1B13-CFDACBFB66F5} = {D17F1B4C-42DC-4E78-BCEF-9F239A084C4D}

EndGlobalSection

+ GlobalSection(ExtensibilityGlobals) = postSolution

+ SolutionGuid = {66BD4D50-3779-4912-9596-2C838BF24911}

+ EndGlobalSection

EndGlobal

diff --git a/GitHub.Unity.sln.DotSettings b/GitHub.Unity.sln.DotSettings

index 31c5e56f1..2166c735a 100644

--- a/GitHub.Unity.sln.DotSettings

+++ b/GitHub.Unity.sln.DotSettings

@@ -22,8 +22,11 @@

END_OF_LINE

1

1

+ False

+ False

False

True

+ NEVER

False

True

False

@@ -339,8 +342,13 @@

SSH

<Policy Inspect="True" Prefix="" Suffix="" Style="aaBb" />

<Policy Inspect="True" Prefix="" Suffix="" Style="aaBb" />

+ True

+ True

+ True

+ True

True

True

+ True

True

True

True

diff --git a/LICENSE b/LICENSE

index 9f06ebe84..a306528ee 100644

--- a/LICENSE

+++ b/LICENSE

@@ -1,6 +1,6 @@

MIT License

-Copyright (c) 2016-2018 GitHub

+Copyright (c) 2016-2019 GitHub

Permission is hereby granted, free of charge, to any person obtaining a copy

of this software and associated documentation files (the "Software"), to deal

diff --git a/README.md b/README.md

index 236bdd910..1cd67b951 100644

--- a/README.md

+++ b/README.md

@@ -1,14 +1,16 @@

# [GitHub for Unity](https://unity.github.com)

-The GitHub for Unity extension brings [Git](https://git-scm.com/) and GitHub into [Unity](https://unity3d.com/), integrating source control into your work with friendly and accessible tools and workflows.

+## NOTICE OF DEPRECATION

-You can reach the team right here by opening a [new issue](https://github.com/github-for-unity/Unity/issues/new), or by joining one of the chats below. You can also email us at unity@github.com, or tweet at [@GitHubUnity](https://twitter.com/GitHubUnity)

+This project is dead y'all! Remove GitHub for Unity from your project, then go to https://github.com/spoiledcat/git-for-unity and install Git for Unity from the instructions there.

-[](https://ci.appveyor.com/project/github-windows/unity)

+# What is it

+

+The GitHub for Unity extension brings [Git](https://git-scm.com/) and GitHub into [Unity](https://unity3d.com/), integrating source control into your work with friendly and accessible tools and workflows.

-[](https://discord.gg/5zH8hVx)

-[](https://www.twitch.tv/sh4na)

+You can reach the team right here by opening a [new issue](https://github.com/github-for-unity/Unity/issues/new). You can also tweet at [@GitHubUnity](https://twitter.com/GitHubUnity)

+[](https://ci.appveyor.com/project/github-windows/unity)

## Notices

@@ -16,169 +18,19 @@ Please refer to the [list of known issues](https://github.com/github-for-unity/U

From version 0.19 onwards, the location of the plugin has moved to `Assets/Plugins/GitHub`. If you have version 0.18 or lower, you need to delete the `Assets/Editor/GitHub` folder before you install newer versions. You should exit Unity and delete the folder from Explorer/Finder, as Unity will not unload native libraries while it's running. Also, remember to update your `.gitignore` file.

-#### Table Of Contents

-

-[Installing GitHub for Unity](#installing-github-for-unity)

- * [Requirements](#requirements)

- * [Git on macOS](#git-on-macos)

- * [Git on Windows](#git-on-windows)

- * [Installation](#installation)

- * [Log files](#log-files)

- * [Windows](#windows)

- * [macOS](#macos)

-

-[Building and Contributing](#building-and-contributing)

-

-[Quick Guide to GitHub for Unity](#quick-guide-to-github-for-unity)

- * [Opening the GitHub window](#opening-the-github-window)

- * [Initialize Repository](#initialize-repository)

- * [Authentication](#authentication)

- * [Publish a new repository](#publish-a-new-repository)

- * [Commiting your work - Changes tab](#commiting-your-work---changes-tab)

- * [Pushing/pulling your work - History tab](#pushingpulling-your-work---history-tab)

- * [Branches tab](#branches-tab)

- * [Settings tab](#settings-tab)

-

-[More Resources](#more-resources)

-

-[License](#license)

-

-## Installing GitHub for Unity

-

-### Requirements

-

-- Unity 5.4 or higher

- - There's currently an blocker issue opened for 5.3 support, so we know it doesn't run there. Personal edition is fine.

-- Git and Git LFS 2.x

-

-#### Git on macOS

-

-The current release has limited macOS support. macOS users will need to install the latest [Git](https://git-scm.com/downloads) and [Git LFS](https://git-lfs.github.com/) manually, and make sure these are on the path. You can configure the Git location in the Settings tab on the GitHub window.

-

-The easiest way of installing git and git lfs is to install [Homebrew](https://brew.sh/) and then do `brew install git git-lfs`.

-

-Make sure a Git user and email address are set in the `~/.gitconfig` file before you initialize a repository for the first time. You can set these values by opening your `~/.gitconfig` file and adding the following section, if it doesn't exist yet:

-

-```

-[user]

- name = Your Name

- email = Your Email

-```

-

-#### Git on Windows

-

-The GitHub for Unity extension ships with a bundle of Git and Git LFS, to ensure that you have the correct version. These will be installed into `%LOCALAPPDATA%\GitHubUnity` when the extension runs for the first time.

-

-Make sure a Git user and email address are set in the `%HOME%\.gitconfig` file before you initialize a repository for the first time. You can set these values by opening your `%HOME%\.gitconfig` file and adding the following section, if it doesn't exist yet:

-

-```

-[user]

- name = Your Name

- email = Your Email

-```

-

-Once the extension is installed, you can open a command line with the same Git and Git LFS version that the extension uses by going to `Window` -> `GitHub Command Line` in Unity.

-

-### Installation

-

-This extensions needs to be installed (and updated) for each Unity project that you want to version control.

-First step is to download the latest package from [the releases page](https://github.com/github-for-unity/Unity/releases);

-it will be saved as a file with the extension `.unitypackage`.

-To install it, open Unity, then open the project you want to version control, and then double click on the downloaded package.

-Alternatively, import the package by clicking Assets, Import Package, Custom Package, then select the downloaded package.

-

-#### Log files

-

-##### macOS

-

-The extension log file can be found at `~/Library/Logs/GitHubUnity/github-unity.log`

-

-##### Windows

-

-The extension log file can be found at `%LOCALAPPDATA%\GitHubUnity\github-unity.log`

-

## Building and Contributing

-The [CONTRIBUTING.md](CONTRIBUTING.md) document will help you get setup and familiar with the source. The [documentation](docs/) folder also contains more resources relevant to the project.

-

Please read the [How to Build](docs/contributing/how-to-build.md) document for information on how to build GitHub for Unity.

-If you're looking for something to work on, check out the [up-for-grabs](https://github.com/github-for-unity/Unity/issues?q=is%3Aopen+is%3Aissue+label%3Aup-for-grabs) label.

-

-

-## I have a problem with GitHub for Unity

-

-First, please search the [open issues](https://github.com/github-for-unity/Unity/issues?q=is%3Aopen)

-and [closed issues](https://github.com/github-for-unity/Unity/issues?q=is%3Aclosed)

-to see if your issue hasn't already been reported (it may also be fixed).

-

-If you can't find an issue that matches what you're seeing, open a [new issue](https://github.com/github-for-unity/Unity/issues/new)

-and fill out the template to provide us with enough information to investigate

-further.

-

-## Quick Guide to GitHub for Unity

-

-### Opening the GitHub window

-

-You can access the GitHub window by going to Windows -> GitHub. The window opens by default next to the Inspector window.

-

-### Initialize Repository

-

-

-

-If the current Unity project is not in a Git repository, the GitHub for Unity extension will offer to initialize the repository for you. This will:

-

-- Initialize a git repository at the Unity project root via `git init`

-- Initialize git-lfs via `git lfs install`

-- Set up a `.gitignore` file at the Unity project root.

-- Set up a `.gitattributes` file at the Unity project root with a large list of known binary filetypes (images, audio, etc) that should be tracked by LFS

-- Configure the project to serialize meta files as text

-- Create an initial commit with the `.gitignore` and `.gitattributes` file.

-

-### Authentication

-

-To set up credentials in Git so you can push and pull, you can sign in to GitHub by going to `Window` -> `GitHub` -> `Account` -> `Sign in`. You only have to sign in successfully once, your credentials will remain on the system for all Git operations in Unity and outside of it. If you've already signed in once but the Account dropdown still says `Sign in`, ignore it, it's a bug.

-

-

-

-### Publish a new repository

-

-1. Go to [github.com](https://github.com) and create a new empty repository - do not add a license, readme or other files during the creation process.

-2. Copy the **https** URL shown in the creation page

-3. In Unity, go to `Windows` -> `GitHub` -> `Settings` and paste the url into the `Remote` textbox.

-3. Click `Save repository`.

-4. Go to the `History` tab and click `Push`.

-

-### Commiting your work - Changes tab

-

-You can see which files have been changed and commit them through the Changes tab. `.meta` files will show up in relation to their files on the tree, so you can select a file for comitting and automatically have their `.meta`

-

-

-

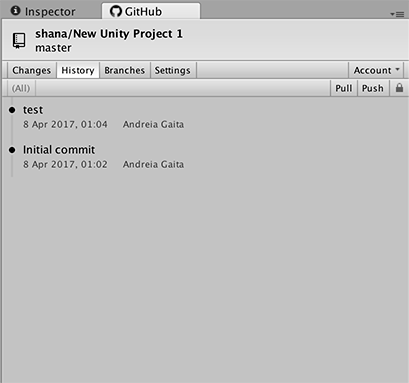

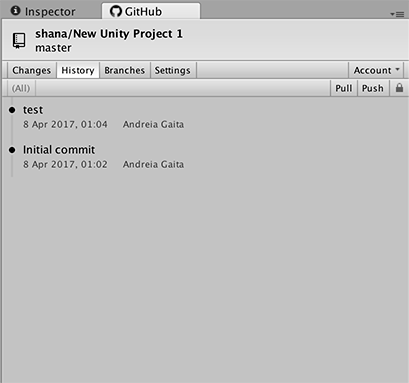

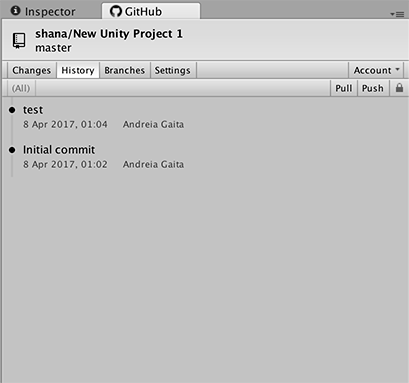

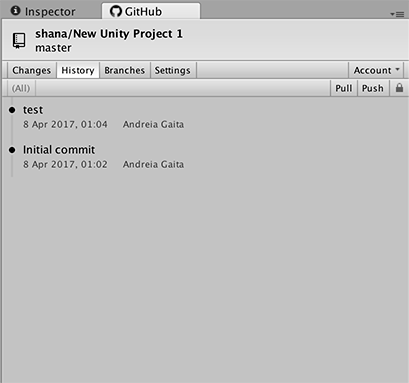

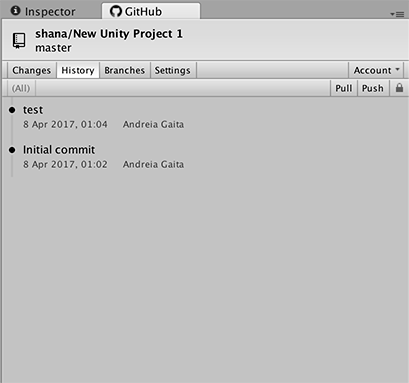

-### Pushing/pulling your work - History tab

-

-The history tab includes a `Push` button to push your work to the server. Make sure you have a remote url configured in the `Settings` tab so that you can push and pull your work.

-

-To receive updates from the server by clicking on the `Pull` button. You cannot pull if you have local changes, so commit your changes before pulling.

-

-

-

-### Branches tab

-

-

-

-### Settings tab

-

-You can configure your user data in the Settings tab, along with the path to the Git installation.

+The [CONTRIBUTING.md](CONTRIBUTING.md) document will help you get set up and familiar with the source. The [documentation](docs/) folder also contains more resources relevant to the project.

-Locked files will appear in a list in the Settings tab. You can see who has locked a file and release file locks after you've pushed your work.

+If you're looking for something to work on, check out the [up-for-grabs](https://github.com/github-for-unity/Unity/issues?q=is%3Aopen+is%3Aissue+label%3Aup-for-grabs) label.

-

+## How to use

-## More Resources

+The [quick guide to GitHub for Unity](docs/using/quick-guide.md)

-See [unity.github.com](https://unity.github.com) for more product-oriented

-information about GitHub for Unity.

+More [in-depth information](docs/readme.md)

## License

@@ -188,6 +40,6 @@ The MIT license grant is not for GitHub's trademarks, which include the logo

designs. GitHub reserves all trademark and copyright rights in and to all

GitHub trademarks. GitHub's logos include, for instance, the stylized

Invertocat designs that include "logo" in the file title in the following

-folder: [IconsAndLogos](https://github.com/github-for-unity/Unity/tree/master/src/UnityExtension/Assets/Editor/GitHub.Unity/IconsAndLogos).

+folder: [IconsAndLogos](src/UnityExtension/Assets/Editor/GitHub.Unity/IconsAndLogos).

Copyright 2015 - 2018 GitHub, Inc.

diff --git a/appveyor.yml b/appveyor.yml

index 3736c7189..bfb3e1b77 100644

--- a/appveyor.yml

+++ b/appveyor.yml

@@ -1,35 +1,28 @@

-version: '{build}.{branch}'

environment:

GHFU_KEY:

secure: KFcQA1VOCEMGUgy2dxH8G5O7C9DsAtQrnc6LakFpd9BRFtNnt2E8RSadPoJwQ9gztWaLS8vQLdU7cV5Ivt01LOnPI2kU1fQd2SHtKwJFve8ppvK/yZ/luhiXvIdGeEAiXQyuc1WUwuECoJVA6n7/uQKr1Q+eHniMitHuFpyQ7OqnwF6f+4TeBS6D78fKd3QoeP4XDdxCjNWPNmLv7BvWFMm5CuTK9aAWhOy8em/nVIED8qt36uHnncsDn+DH7uunj+VwmhVS+4yhuKHHz5naiUAHHIziZ4wBW6Q8rcf7xYEeISjlfxJ6TXs4Wwp406AO+n3v1DZaxSXwvoxplfopeGyb6imJfbwdTU/MHf2uj9wXobR8UhDarcrugVW+J3bqZyvkg20HfSe80gfQUBlK5OdMAp58dhWkddvJSO5TnzqmLlo/60gZxjheIbjdLaavSKcM4xOALXQlBbJJgVQrB/F6tYf7pRK3BlS8VoakyOGjJRzsSNdssSVrLVW3rwANORbH6Z1ZYvQw2ObP6/EBMceer0+JV4y9zB5q9C68erlr1NtJB0xKUp9/7I5GQj4lJ+pDsaFdsj40SyyD4yazSZf/3VIhZi/rQTJm0Ft0ifTZGSxnNTOVMZ5fsoJmUL0bn75Xt9q59cKYzK041HtEzRSElnBeuTf+Sm/MLLV28P1sonwntMhcYQ5ZPIuGmKa8jAJ0kxPXyT56MPJpwbNrbCOw2t9hXg5QbYv/+0RcoRoJ7P/5OY3M6mj8Emtu8N0SFKD6lfv9KNLFAyBuF6Ml7RyOs5RRogIdEapegTY7jwGH7igibrP0lt6HYshM7hKQrRYm2saokBV9TfgV9qnPyGs5/zyTTUGW4y0LavxiXl/vQIpxwCg4liCf1Dgw4Zrxvh40bziKGc3X06RJcysJ8cskOi6gB8eK8VTbAy6/Ufgv+pyjIns1iJxmdVbl8MrlgPXmOephdRPYZJDiFd4ynw0Slm1mqbzPWHdQ/mtMGxNRcysIOPKzKaKvK7Syor5SNtv4HU097dyjVQyW9krHaX/DSnx++dMDCIZJEDYxFw7LmTvl6AFWU4C3HM7+26cHBuBMBYS1PwcRijG+hwsHiXIomVuVglcxp5HC2eFbtBF9h1g030/tCeIBhZIVdSVO78319CsL1+aVtI5WjeQglH1OcTS42OF1Nb/4EjaN4I/w6yRJU5dmK7Q+rHQ+7NnPT4n5flQV6oe1XNbLen0raDuGos6v+aaoOQ50HlCSMMBJ3liapVXIAQ+Z/XM/cNZZa1TB6/C363Hjrts6Uq3IjKXmomhA33je+Wl6mTZqBucXUJs76p+ZgKubWvfzK/e6tORJggAgFNoa9Y56r3t7J8UdUolt301I1cCVz9CvYMUsWTmjKTR5SpssbbcuRcFUhgkHrhsSq0rBef/dfn5VEP8sateiqbpMne5iWO4Wc0Qx2/fJfx2zf9oqO3MihAyYDwPI7JCrmccJY9cHV89YlrytZuh87aBQ8d+T5ELdeG3bbYGYbKJ/yOGxMo8cRSomWUp2809Ea/lgu6WjyY1SdEjh20fwONOTRd/AA3h3XIMU7NY999YOEy1zj3yeP+awozDWQU8GSojA/ceLU5HT0U/RT2XJgkzUFb/u8wanNG6InPqvZy01bLR8JZmqXZl/4XgNpEsbzwPxjzuZJP6O4f2Usq1ZmHUfwlouXLWuHTv5/DPYJ9kO91wjzAH46IsoadmQkRDomHYCDPRCnYoS2zBkmBNukCgCKWsSwD5Msw/tpgIorMi5AAFhIOeWt/7tcKZ5nslbbnmZFtDkOBriPEiOjrAziRqFNAdBjecMrckRQrlkYjkpJG/pXZXYq219/8Sy1/HdNrWTCdq7nc947Fvq41CfumT4c2TqhVc/oflJ9SaxIl2A1Vtbw5LkmQKL08vOitsyZgRupcbqLcSYGo2dG+ks0gK5o2rvNp1nyN3ADh7JFmtD7/og55zlsAj7wP6rLEZ4
4h+dk7+Sh96WoCPfzSJnchg2vsydTpK6sG3Cp5qjEk0Tps88nX0SPvEPxwEpBL08+XIlg5OVxiTIYI0NSeEjAxwip2ptgaeI4c0yPB5SahMArEw/8YeEflWhiDyjoG4Bw0O1v9fRSSYiFhcYwrkr6yBK81hx6uH6DzqDqtKOxwJ3kKhapfwZXStmeOt4AwiSUHO8TiX1t0i7Jqwl7mRduz3LfmqGCeEsNxnLuhc6MPeEva8LO8ILCEcVz8bHlwUMWqabdZRm9UtbWtZp/u8ffPSBbgNFna2kKFr/F7dmXiv18CpNHOGxb/rSdmIaov1nXJR7XUyKPRO548PHE6iNxuNjWjcuFw1L0IUCNEeVUs7tvNHUTYOXRvfXNm3DbhjFnGix2JVCB2xz6QhDV4Hh6y0/rJl0b2dW25iM5HZdwCBGwgGM+9HyD7r+OBiRn+rd996c81+JsWL4jsa//16uwcbEpsF3tAB7b0by4qHbeZ+Gs3M06Sje4UVpLgKQVHSd/hfo4M70v3APhyz0WFBhLLZyouz0OdazKZ4W+HGBcunAPw/sYdMYZLe4ZmA6B+wxtSzojNKFaCFWoh3S5vLClZTraj7Mhh02PPsY0fmo15ceHBwKjMfGZ0pXt8uiPL29ECUstxSLVnPv6M4uXPJa7k+0lvj7XdB7aJ/LzexPAa/Z1+hsr2sO9An5qPnKM5Tp5zj9Xq2T7WBiDObYLxYZX5ez32jKfSYgv3cpIo5HnhKB3rZL3Alp6iJ2NFsDiB6pIUc2YQ3UU8wiMU90ifA83ORttzRDdLCuH1lYCHPk8rcVqeydgNrI4pRVrdIah3wm6hHc7YjSSnjIOhcl286iVtYgn10RUKxcs//ElgoGm0IkefKRy2WcDDL+10ZifpSWxRu0yrpwlxd0uHCAhrkOEnvaamn+0TSu/6s9VxoUyn9ZJhY7Jgnb6Z9Qxi4C+u2vXf6lOQvzl4AawnD9DW+w2L6hr2njGhvgjj2VLIHM/GIOV/OaYW97AiW0NBuEGDyBiuj8TxIUL7IuVj+QZVfyUzZHHL0c0Hy4jlQ+sh2nFzOAGWVZwEdAvLl9JCCs46iA9DHtBSrHxit7lytyspp7q8TYfE1lA0pIwkx20E3t+4CNdUQAr/IJaZJxhdfKAyW3UipP4LdRbweyYHZYFkoN0gEDMrzE0yB7XFNw5ddm/+o8KIuSUl44UVFcp2j0KPfuXadx7Pz1aa5HKpVUdc5CfJOjqgPJFn/MQU702YdUaV0qD+EHDOiVv313gUHdy9kpieQ3s2LDSh0qBkPdxLAdYXKLP24Mj3V+A2lyHU1WtLrIEVP37eCAFSYPf6Lz6TW4zrEBpHF4nwlE8M+0jQ/oB4lINxnkCa3YKYLFMiZ3dAmqGzVElesgymmB21xvdfrHgB1Z5OtQqYT8PPAw6llujXv6Pj9CqDGGS4U8UeW5GCFi/qyV6+hdg2IUsWtSzkbLJ5n8cfafEYeRBRgzK/B6qlTmoOrRl+bzmjVCJX29P+38KCpu7srnSQ+T0fR6t0OWyHGfC/39iMzATnhpiIXdnngVV9Cypgod5we44C2Rb4Or/nr5mdEidElIIthDiD7GHPNSeMXrdxs+ow76rh42DiY7x0L0SMRWyUEz0seL1JdBCdNn/7LuSn4CVpggqZD8anf9n+IUjrJtqQ+AvaogfuxM65byhGK4iVIijrogfBHb4nGywXxeEKe03JJ8nOWWN2ndyNhMW1dfNGraHvAt7DWL+/tp4qKCA89VFaZjwsqINANF1VVwh96SB6qT4tlKJjaPD3YpawT6Jfs+cg3pMj36FIPzHoNd/r+LwCBZ0WiA5xZiO0DX6WhwTfJVStsz4i9VXElCmWF2dpf5kTEC0T62Y1VCc++M1cTfwX34mdHPvdsm1Vi1qpqz4HTez8ateFukyj1FIN7++eYWoBJBoclhb3y/VUFwepORi84pz1fXUSSl8Fpg2U7NRyj+gcM5v/VAC1FGR4CJVpOD
IdROF7mCrLTbPzLn8Fv7EJHgHKNeU/sIT13+5V/UJSZPAxWcaUKhRWWuShSVb/1U13LjiWkHvmuH7SVLHbJDO5C5lA589rz4weTMd1OSymPuNB/xj2d2YrJUwqB3olsaxwm8w/bs2ot4GF4HFAdx3l0ESiR8jkBNAvr6vwRcXv+7nfXRpx2Mo5QU2YaunbqZxibmtNCQZBH8ZpQyUZOek4A5qDh6HW2VyJqKXeE8u1fbtOzB9xDYxgTrlVFhCw==

-clone_script:

-- ps: >-

- if(-not $env:appveyor_pull_request_number) {

- git lfs clone -q -n --branch=$env:appveyor_repo_branch https://github.com/$env:appveyor_repo_name.git $env:appveyor_build_folder

- git checkout -qf $env:appveyor_repo_commit

- } else {

- git lfs clone -q -n https://github.com/$env:appveyor_repo_name.git $env:appveyor_build_folder

- git fetch -q origin +refs/pull/$env:appveyor_pull_request_number/merge:

- git lfs fetch origin FETCH_HEAD

- git checkout -qf FETCH_HEAD

- }

-

- Set-Location $env:appveyor_build_folder

+ matrix:

+ - node_version: '8'

install:

- ps: >-

+ $full_build = Test-Path env:GHFU_KEY

+

+ $package = $full_build

+

git submodule sync

git submodule init

- $full_build = Test-Path env:GHFU_KEY

-

if ($full_build) {

+ $env:BUILD_TYPE="full"

$fileContent = "-----BEGIN RSA PRIVATE KEY-----`n"

$fileContent += $env:GHFU_KEY.Replace(' ', "`n")

$fileContent += "`n-----END RSA PRIVATE KEY-----`n"

Set-Content c:\users\appveyor\.ssh\id_rsa $fileContent

+ Install-Product node $env:node_version

} else {

+ $env:BUILD_TYPE="partial"

git submodule deinit script

$destdir = Join-Path $env:appveyor_build_folder 'lib'

$destfile = Join-Path $destdir 'deps.zip'

@@ -43,6 +36,24 @@ install:

nuget restore GitHub.Unity.sln

+ Set-Location $env:appveyor_build_folder

+

+ $version = Get-Content "$($env:appveyor_build_folder)\common\SolutionInfo.cs" | %{ $regex = "const string GitHubForUnityVersion = `"([^`"]*)`""; if ($_ -match $regex) { $matches[1] } }

+

+ $env:package_version="$($version).$($env:APPVEYOR_BUILD_NUMBER)"

+

+ Update-AppveyorBuild -Version $env:package_version

+

+ $message = "Building "

+

+ if ($package) { $message += "and packaging "}

+

+ if ($full_build) { $message += "(full build)" } else { $message += "(partial build)" }

+

+ $message += " version " + $env:package_version + " "

+

+ Write-Host $message

+

assembly_info:

patch: false

file: common\SolutionInfo.cs

@@ -60,10 +71,37 @@ test:

categories:

except:

- DoNotRunOnAppVeyor

-artifacts:

-- path: unity\PackageProject

- type: zip

- name: github-for-unity-packageproject

-- path: build\*.log

-on_failure:

- - ps: Get-ChildItem build\*.log | % { Push-AppveyorArtifact $_.FullName -FileName $_.Name }

+on_success:

+- ps: |

+ if ($package) {

+ $rootdir=$env:appveyor_build_folder

+ Set-Location $rootdir

+ $sourcedir="$rootdir\unity\PackageProject"

+ $packagename="github-for-unity-$($env:package_version)"

+ $packagefile="$rootdir\$($packagename).unitypackage"

+ $commitfile="$sourcedir\commit"

+ $zipfile="$rootdir\PackageProject-$($env:package_version).zip"

+

+ # generate mdb files

+ Write-Output "Generating mdb files"

+ Get-ChildItem -Recurse "$($sourcedir)\*.pdb" | foreach { $_.fullname.substring(0, $_.fullname.length - $_.extension.length) } | foreach { Write-Output "Generating $($_).mdb"; & 'lib\pdb2mdb.exe' "$($_).dll" }

+

+ # generate unitypackage

+ Write-Output "Generating $packagefile"

+ submodules\packaging\unitypackage\run.ps1 -PathToPackage:$sourcedir -OutputFolder:$rootdir -PackageName:$packagename

+

+ # save commit

+ Add-Content $commitfile $env:appveyor_repo_commit

+

+ Write-Output "Zipping $sourcedir to $zipfile"

+ 7z a $zipfile $sourcedir

+

+ Write-Output "Uploading $zipfile"

+ Push-AppveyorArtifact $zipfile -DeploymentName source

+ Push-AppveyorArtifact $packagefile -DeploymentName package

+ Push-AppveyorArtifact "$($packagefile).md5" -DeploymentName package

+ }

+on_finish:

+- ps: |

+ Set-Location $env:appveyor_build_folder

+ Get-ChildItem $env:appveyor_build_folder\build\*.log | % { Push-AppveyorArtifact $_.FullName -FileName $_.Name -DeploymentName logs }

diff --git a/common/SolutionInfo.cs b/common/SolutionInfo.cs

index 62edf1036..eb95db6a0 100644

--- a/common/SolutionInfo.cs

+++ b/common/SolutionInfo.cs

@@ -11,7 +11,7 @@

[assembly: AssemblyInformationalVersion(System.AssemblyVersionInformation.Version)]

[assembly: ComVisible(false)]

[assembly: AssemblyCompany("GitHub, Inc.")]

-[assembly: AssemblyCopyright("Copyright GitHub, Inc. 2017-2018")]

+[assembly: AssemblyCopyright("Copyright GitHub, Inc. 2016-2019")]

[assembly: AssemblyConfiguration("")]

[assembly: AssemblyTrademark("")]

[assembly: AssemblyCulture("")]

@@ -31,9 +31,10 @@

namespace System

{

internal static class AssemblyVersionInformation {

- // this is for the AssemblyVersion and AssemblyVersion attributes, which can't handle alphanumerics

- internal const string VersionForAssembly = "1.0.0";

- // Actual real version

- internal const string Version = "1.0.0rc5";

+ private const string GitHubForUnityVersion = "1.4.0";

+ internal const string VersionForAssembly = GitHubForUnityVersion;

+

+ // If this is an alpha, beta or other pre-release, mark it as such as shown below

+ internal const string Version = GitHubForUnityVersion; // GitHubForUnityVersion + "-beta1"

}

}

diff --git a/common/packaging.targets b/common/packaging.targets

index 77f866e20..a5ddd41a5 100644

--- a/common/packaging.targets

+++ b/common/packaging.targets

@@ -7,8 +7,9 @@

@@ -22,16 +23,16 @@

+ Condition="!$([System.String]::Copy('%(Filename)').Contains('deleteme')) and !$([System.String]::Copy('%(Extension)').Contains('xml'))" />

-

+

-

+

diff --git a/common/properties.props b/common/properties.props

index 977f217b3..8316329ed 100644

--- a/common/properties.props

+++ b/common/properties.props

@@ -3,7 +3,7 @@

Internal

- ENABLE_METRICS

+ ENABLE_METRICS

$(BuildDefs);ENABLE_MONO

$(SolutionDir)script\lib\

diff --git a/create-octorun-zip.sh b/create-octorun-zip.sh

new file mode 100755

index 000000000..4eb568d33

--- /dev/null

+++ b/create-octorun-zip.sh

@@ -0,0 +1,3 @@

+#!/bin/sh -eu

+DIR=$(pwd)

+submodules/packaging/octorun/run.sh --path $DIR/octorun --out $DIR/src/GitHub.Api/Resources --source $DIR/src/GitHub.Api/Installer

diff --git a/create-unitypackage.sh b/create-unitypackage.sh

new file mode 100755

index 000000000..333f7cb90

--- /dev/null

+++ b/create-unitypackage.sh

@@ -0,0 +1,9 @@

+#!/bin/bash -eu

+DIR="$( cd "$( dirname "${BASH_SOURCE[0]}" )" && pwd )"

+

+version=$(sed -En 's,.*GitHubForUnityVersion = "(.*)".*,\1,p' common/SolutionInfo.cs)

+commitcount=$(git rev-list --count HEAD)

+commit=$(git log -n1 --pretty=format:%h)

+version="${version}.${commitcount}-${commit}"

+

+$DIR/submodules/packaging/unitypackage/run.sh --path $DIR/unity/PackageProject --out $DIR --file github-for-unity-$version
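Review note: the version string that `create-unitypackage.sh` assembles can be checked in isolation. The following is a minimal sketch, assuming the version constant keeps the shape it has in `common/SolutionInfo.cs`. The helper names `extract_version` and `compose_version`, the sample input line, and the commit count and hash are hypothetical and exist only for illustration; the `sed` expression is the one used in the script.

```shell
#!/bin/sh
# Hypothetical helper: read SolutionInfo.cs-style text on stdin and print the
# quoted value assigned to GitHubForUnityVersion (same sed expression as the script).
extract_version() {
    sed -En 's,.*GitHubForUnityVersion = "(.*)".*,\1,p'
}

# Hypothetical helper: join base version, commit count and short hash
# in the same "<version>.<count>-<hash>" layout the script builds.
compose_version() {
    printf '%s.%s-%s\n' "$1" "$2" "$3"
}

# Sample input (assumed shape of the constant's declaration).
sample='private const string GitHubForUnityVersion = "1.4.0";'
version=$(printf '%s\n' "$sample" | extract_version)

# Made-up commit count and hash; the script takes these from git.
full=$(compose_version "$version" 1234 abc1234)

echo "$version"
echo "$full"
```

Only the declaration line matches, because the other lines in `SolutionInfo.cs` that mention `GitHubForUnityVersion` do not follow it with ` = "`.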

diff --git a/docs/contributing/how-to-build.md b/docs/contributing/how-to-build.md

index db494a224..13ef8c2fa 100644

--- a/docs/contributing/how-to-build.md

+++ b/docs/contributing/how-to-build.md

@@ -13,7 +13,7 @@ This repository is LFS-enabled. To clone it, you should use a git client that su

### MacOS

-- Mono 4.x required.

+- [Mono 4.x](https://download.mono-project.com/archive/4.8.1/macos-10-universal/) required. You can install it via brew with `brew tap shana/mono && brew install mono@4.8`

- Mono 5.x will not work

- `UnityEngine.dll` and `UnityEditor.dll`.

- If you've installed Unity in the default location of `/Applications/Unity`, the build will be able to reference these DLLs automatically. Otherwise, you'll need to copy these DLLs from `[Unity installation path]/Unity.app/Contents/Managed` into the `lib` directory in order for the build to work

@@ -35,12 +35,25 @@ git submodule deinit script

### Important pre-build steps

-To be able to authenticate in GitHub for Unity, you'll need to:

+The build needs to reference `UnityEngine.dll` and `UnityEditor.dll`. These DLLs are included with Unity. If you've installed Unity in the default location, the build will be able to find them automatically. If not, copy these DLLs from `[your Unity installation path]\Unity\Editor\Data\Managed` into the `lib` directory in order for the build to work.

+

+#### Developer OAuth app

+

+Because GitHub for Unity uses the OAuth web application flow to interact with the GitHub API and perform actions on behalf of a user, it needs to be bundled with a Client ID and Secret.

+

+For external contributors, we have bundled a developer OAuth application in the source so that you can complete the sign in flow locally without needing to configure your own application.

+

+These are listed in `src/GitHub.Api/Application/ApplicationInfo.cs`

+

+DO NOT TRUST THIS CLIENT ID AND SECRET! THIS IS ONLY FOR TESTING PURPOSES!!

+

+The limitation of this developer application is that it will not work with GitHub Enterprise: sign-in will fail on the OAuth callback because the credentials are not present there.

+

+To provide your own Client ID and Client Secret:

- [Register a new developer application](https://github.com/settings/developers) in your profile.

- Copy [common/ApplicationInfo_Local.cs-example](../../common/ApplicationInfo_Local.cs-example) to `common/ApplicationInfo_Local.cs` and fill out the clientId/clientSecret fields for your application.

-The build needs to reference `UnityEngine.dll` and `UnityEditor.dll`. These DLLs are included with Unity. If you've installed Unity in the default location, the build will be able to find them automatically. If not, copy these DLLs from `[your Unity installation path]\Unity\Editor\Data\Managed` into the `lib` directory in order for the build to work.

### Visual Studio

@@ -56,13 +69,12 @@ Once you've built the solution for the first time, you can open `src/UnityExtens

The build also creates a Unity test project called `GitHubExtension` inside a directory called `github-unity-test` next to your local clone. For instance, if the repository is located at `c:\Projects\Unity` the test project will be at `c:\Projects\github-unity-test\GitHubExtension`. You can use this project to test binary builds of the extension in a clean environment (all needed DLLs will be copied to it every time you build).

-Note: some files might be locked by Unity if have one of the build output projects open when you compile from VS or the command line. This is expected and shouldn't cause issues with your builds.

+Note: some files might be locked by Unity if you have one of the build output projects open when you compile from VS or the command line. This is expected and shouldn't cause issues with your builds.

## Solution organization

The `GitHub.Unity.sln` solution includes several projects:

-- dotnet-httpclient35 and octokit: external dependencies for threading and github api support, respectively. These are the submodules.

- packaging: empty projects with build rules that copy DLLs to various locations for testing

- Tests: unit and integration test projects

- GitHub.Logging: A logging helper library

diff --git a/docs/readme.md b/docs/readme.md

index ae4be69a9..d73e8e840 100644

--- a/docs/readme.md

+++ b/docs/readme.md

@@ -30,6 +30,8 @@ Details about how the team is organizing and shipping GitHub for Unity:

## Using

+[Quick Guide](using/quick-guide.md)

+

These documents contain more details on how to use the GitHub for Unity plugin:

-- **[Installing and Updating the GitHub for Unity package](https://github.com/github-for-unity/Unity/blob/master/docs/using/how-to-install-and-update.md)**

-- **[Getting Started with the GitHub for Unity package](https://github.com/github-for-unity/Unity/blob/master/docs/using/getting-started.md)**

+- **[Installing and Updating the GitHub for Unity package](using/how-to-install-and-update.md)**

+- **[Getting Started with the GitHub for Unity package](using/getting-started.md)**

diff --git a/docs/using/authenticating-to-github.md b/docs/using/authenticating-to-github.md

new file mode 100644

index 000000000..203a3473d

--- /dev/null

+++ b/docs/using/authenticating-to-github.md

@@ -0,0 +1,37 @@

+# Authenticating to GitHub

+

+## How to sign in to GitHub

+

+1. Open the **GitHub** window by going to the top level **Window** menu and selecting **GitHub**, as shown below.

+

+1. Click the **Sign in** button at the top right of the window.

+

+1. In the **Authenticate** dialog, enter your username or email and password

+

+ If your account requires two-factor authentication, you will be prompted for your authentication code.

+

+You will need to create a GitHub account before you can sign in, if you don't have one already.

+

+- For more information on creating a GitHub account, see "[Signing up for a new GitHub account](https://help.github.com/articles/signing-up-for-a-new-github-account/)".

+

+### Personal access tokens

+

+If the sign-in operation above fails, you can manually create a personal access token and use it as your password.

+

+The scopes for the personal access token are: `user`, `repo`.

+- *user* scope: Grants access to the user profile data. We currently use this to display your avatar and check whether your plan lets you publish private repositories.

+- *repo* scope: Grants read/write access to code, commit statuses, invitations, collaborators, adding team memberships, and deployment statuses for public and private repositories and organizations. This is needed for all git network operations (push, pull, fetch), and for getting information about the repository you're currently working on.

+

+***Note:*** *Some older versions of the plugin ask for `gist` and `write:public_key`.*

+

+For more information on creating personal access tokens, see "[Creating a personal access token for the command line](https://help.github.com/articles/creating-a-personal-access-token-for-the-command-line)."

+

+For more information on authenticating with SAML single sign-on, see "[About authentication with SAML single sign-on](https://help.github.com/articles/about-authentication-with-saml-single-sign-on)."

diff --git a/docs/using/getting-started.md b/docs/using/getting-started.md

index 11f2b008f..4c5892a96 100644

--- a/docs/using/getting-started.md

+++ b/docs/using/getting-started.md

@@ -19,8 +19,10 @@ And you should see the GitHub spinner:

- History: A history of commits with title, time stamp, and commit author

- Branches: A list of local and remote branches with the ability to create new branches, switch branches, or checkout remote branches

- Settings: your git configuration (pulled from your local git credentials if they have been previously set), your repository configuration (you can manually put the URL to any remote repository here instead of using the Publish button to publish to GitHub), a list of locked files, your git installation details, and general settings to help us better help you if you get stuck

-4. You can

-# Connecting to an Existing Repository

-

-# Connecting to an Existing Repository that already has the GitHub for Unity package

+# Cloning an Existing Repository

+GitHub for Unity does not have the functionality to clone projects (yet!).

+1. Clone the repository (either through the command line or with [GitHub Desktop](https://desktop.github.com/)).

+2. Open the project in Unity.

+3. Install GitHub for Unity if it is not already installed.

+4. The GitHub plugin should load with all functionality enabled.

\ No newline at end of file

diff --git a/docs/using/how-to-install-and-update.md b/docs/using/how-to-install-and-update.md

index 5f357cf79..fb9dd93d8 100644

--- a/docs/using/how-to-install-and-update.md

+++ b/docs/using/how-to-install-and-update.md

@@ -43,4 +43,9 @@ Once you've downloaded the package file, you can quickly install it within Unity

# Updating the GitHub for Unity Package

-_COMING SOON_

+

+- If Unity is running and you wish to update GitHub for Unity, be sure that the files in `x64` and `x86` are not selected (unless explicitly stated otherwise).

+

+

+

+- Otherwise, it's best to stop Unity and delete GitHub for Unity from your project. Then start Unity and run the package installer as normal, allowing it to restore everything.

diff --git a/docs/using/images/branches-initial-view.png b/docs/using/images/branches-initial-view.png

new file mode 100644

index 000000000..811bcf724

--- /dev/null

+++ b/docs/using/images/branches-initial-view.png

@@ -0,0 +1,3 @@

+version https://git-lfs.github.com/spec/v1

+oid sha256:558821e67ea26955434da0485785e9240b6c15c44a3790b642fdcd37382e56a2

+size 59798

diff --git a/docs/using/images/changes-view.png b/docs/using/images/changes-view.png

new file mode 100644

index 000000000..23000f8ef

--- /dev/null

+++ b/docs/using/images/changes-view.png

@@ -0,0 +1,3 @@

+version https://git-lfs.github.com/spec/v1

+oid sha256:2ac4637a222a06e6ab57f23082b8e8b2f5cb9e35b9b99776c112ddcebb923dec

+size 61918

diff --git a/docs/using/images/confirm-pull-changes.png b/docs/using/images/confirm-pull-changes.png

new file mode 100644

index 000000000..722bbd639

--- /dev/null

+++ b/docs/using/images/confirm-pull-changes.png

@@ -0,0 +1,3 @@

+version https://git-lfs.github.com/spec/v1

+oid sha256:4b0e1aa1d8d86cb0264c9ca569a5c8e71b0aba73a2eb6611c06ec47670037d7d

+size 21478

diff --git a/docs/using/images/confirm-push-changes.png b/docs/using/images/confirm-push-changes.png

new file mode 100644

index 000000000..ff1ca1cd0

--- /dev/null

+++ b/docs/using/images/confirm-push-changes.png

@@ -0,0 +1,3 @@

+version https://git-lfs.github.com/spec/v1

+oid sha256:9e2e136027b03609f344bfde0c777d8e915544a5e9920f1268e4cc3abc5b1481

+size 18676

diff --git a/docs/using/images/confirm-revert.png b/docs/using/images/confirm-revert.png

new file mode 100644

index 000000000..cbf5d1566

--- /dev/null

+++ b/docs/using/images/confirm-revert.png

@@ -0,0 +1,3 @@

+version https://git-lfs.github.com/spec/v1

+oid sha256:ba990ce12403bfb53fb387ca3f3e2c447b21814ed6cd7e6f88d968463a5253a8

+size 21973

diff --git a/docs/using/images/create-new-branch-view.png b/docs/using/images/create-new-branch-view.png

new file mode 100644

index 000000000..34c69d1ea

--- /dev/null

+++ b/docs/using/images/create-new-branch-view.png

@@ -0,0 +1,3 @@

+version https://git-lfs.github.com/spec/v1

+oid sha256:f0239a51f611501dc63c828de206f35d412493871f65e712983666e67dbfc231

+size 62046

diff --git a/docs/using/images/delete-dialog.png b/docs/using/images/delete-dialog.png

new file mode 100644

index 000000000..574489f7c

--- /dev/null

+++ b/docs/using/images/delete-dialog.png

@@ -0,0 +1,3 @@

+version https://git-lfs.github.com/spec/v1

+oid sha256:99d7cd8ef4957614cc844a08a632159157295414dc4675c1394f3209e30ffa58

+size 76315

diff --git a/docs/using/images/github-authenticate.png b/docs/using/images/github-authenticate.png

new file mode 100644

index 000000000..188121d97

--- /dev/null

+++ b/docs/using/images/github-authenticate.png

@@ -0,0 +1,3 @@

+version https://git-lfs.github.com/spec/v1

+oid sha256:31ca0f7c4fc5c737a3db2da6eeb3deaa862d27e2cf3d1aa78fa14555b7cac750

+size 5927

diff --git a/docs/using/images/github-menu-item.png b/docs/using/images/github-menu-item.png

new file mode 100644

index 000000000..44f3f9458

--- /dev/null

+++ b/docs/using/images/github-menu-item.png

@@ -0,0 +1,3 @@

+version https://git-lfs.github.com/spec/v1

+oid sha256:c423766a70230c245f4bcdb1e33d9e76f267bf789876ecf9050016f9c8671735

+size 19651

diff --git a/docs/using/images/github-sign-in-button.png b/docs/using/images/github-sign-in-button.png

new file mode 100644

index 000000000..cb6132c24

--- /dev/null

+++ b/docs/using/images/github-sign-in-button.png

@@ -0,0 +1,3 @@

+version https://git-lfs.github.com/spec/v1

+oid sha256:79bea09964bcf1964d4d1e1fb9712fe9fc67dc8ffe11f936534c9f2be63fd777

+size 9318

diff --git a/docs/using/images/github-two-factor.png b/docs/using/images/github-two-factor.png

new file mode 100644

index 000000000..7a3286616

--- /dev/null

+++ b/docs/using/images/github-two-factor.png

@@ -0,0 +1,3 @@

+version https://git-lfs.github.com/spec/v1

+oid sha256:aba2174f780ea52a7cf7bbfd5144527bb7b29c3ce6a3200b6b6f8c69b2d6fb48

+size 9665

diff --git a/docs/using/images/locked-scene.png b/docs/using/images/locked-scene.png

new file mode 100644

index 000000000..7a839141d

--- /dev/null

+++ b/docs/using/images/locked-scene.png

@@ -0,0 +1,3 @@

+version https://git-lfs.github.com/spec/v1

+oid sha256:bbc246b18cbd173c19f392fe7ab85bffb8a97a7616ef99e7e37496e307924e49

+size 51983

diff --git a/docs/using/images/locks-view-right-click.png b/docs/using/images/locks-view-right-click.png

new file mode 100644

index 000000000..0ee14312c

--- /dev/null

+++ b/docs/using/images/locks-view-right-click.png

@@ -0,0 +1,3 @@

+version https://git-lfs.github.com/spec/v1

+oid sha256:590dae09b3c029e48c3703d6c85a6774b062ebea46f11c2c062d97fedcb436df

+size 27251

diff --git a/docs/using/images/locks-view.png b/docs/using/images/locks-view.png

new file mode 100644

index 000000000..e8790d74e

--- /dev/null

+++ b/docs/using/images/locks-view.png

@@ -0,0 +1,3 @@

+version https://git-lfs.github.com/spec/v1

+oid sha256:118f2b2e1bb82b598107eaa73db9a077374ff4f9601e47677eca7296353b1bc3

+size 23274

diff --git a/docs/using/images/name-branch.png b/docs/using/images/name-branch.png

new file mode 100644

index 000000000..9926c06db

--- /dev/null

+++ b/docs/using/images/name-branch.png

@@ -0,0 +1,3 @@

+version https://git-lfs.github.com/spec/v1

+oid sha256:08232fa5cfd41a41c8091197ac27f433fdfa865c4996388dd0c731ac75176442

+size 59599

diff --git a/docs/using/images/new-branch-created.png b/docs/using/images/new-branch-created.png

new file mode 100644

index 000000000..2a37a9aeb

--- /dev/null

+++ b/docs/using/images/new-branch-created.png

@@ -0,0 +1,3 @@

+version https://git-lfs.github.com/spec/v1

+oid sha256:8543da247ee23364c15abec87e4e3de3e62b85a470e7d3d090ade18fb321072c

+size 64045

diff --git a/docs/using/images/post-commit-view.png b/docs/using/images/post-commit-view.png

new file mode 100644

index 000000000..81fc7d135

--- /dev/null

+++ b/docs/using/images/post-commit-view.png

@@ -0,0 +1,3 @@

+version https://git-lfs.github.com/spec/v1

+oid sha256:91e26123236bdd3e692ca7d30ff0d6477311fe739d8008ba19fd543ee3506176

+size 21345

diff --git a/docs/using/images/post-push-history-view.png b/docs/using/images/post-push-history-view.png

new file mode 100644

index 000000000..0d2ed767a

--- /dev/null

+++ b/docs/using/images/post-push-history-view.png

@@ -0,0 +1,3 @@

+version https://git-lfs.github.com/spec/v1

+oid sha256:c0c2d5ccaddc88d3268e0d57f02229525240ecc71bded951e23171456560268b

+size 22485

diff --git a/docs/using/images/pull-view.png b/docs/using/images/pull-view.png

new file mode 100644

index 000000000..842ff9660

--- /dev/null

+++ b/docs/using/images/pull-view.png

@@ -0,0 +1,3 @@

+version https://git-lfs.github.com/spec/v1

+oid sha256:feb83a46ebdd34ff46db3a38e8829f5adec5b10824bf69a8c56c9ba6fbd603ff

+size 25054

diff --git a/docs/using/images/push-view.png b/docs/using/images/push-view.png

new file mode 100644

index 000000000..42bef651a

--- /dev/null

+++ b/docs/using/images/push-view.png

@@ -0,0 +1,3 @@

+version https://git-lfs.github.com/spec/v1

+oid sha256:ab87bae0916af3681b7528891a359c77597fa3ea57f13eb6924f1cbcf2f2ab7d

+size 14581

diff --git a/docs/using/images/release-lock.png b/docs/using/images/release-lock.png

new file mode 100644

index 000000000..545b81c8a

--- /dev/null

+++ b/docs/using/images/release-lock.png

@@ -0,0 +1,3 @@

+version https://git-lfs.github.com/spec/v1

+oid sha256:0f6e05a714d270016d1f8ad4e018a895ed2b023fe59bd34a28df8d315c065649

+size 140913

diff --git a/docs/using/images/request-lock.png b/docs/using/images/request-lock.png

new file mode 100644

index 000000000..b877e1d8f

--- /dev/null

+++ b/docs/using/images/request-lock.png

@@ -0,0 +1,3 @@

+version https://git-lfs.github.com/spec/v1

+oid sha256:3f25877141bed2dcbdc4324edcbd802b6f216771e80de704f20dc99a3fdc718b

+size 136078

diff --git a/docs/using/images/revert-commit.png b/docs/using/images/revert-commit.png

new file mode 100644

index 000000000..daad75c8c

--- /dev/null

+++ b/docs/using/images/revert-commit.png

@@ -0,0 +1,3 @@

+version https://git-lfs.github.com/spec/v1

+oid sha256:b7711f428f3e04ea056c47b5d43109f3941761f4c286360d3616e0598d2d7b75

+size 26119

diff --git a/docs/using/images/revert.png b/docs/using/images/revert.png

new file mode 100644

index 000000000..326071a28

--- /dev/null

+++ b/docs/using/images/revert.png

@@ -0,0 +1,3 @@

+version https://git-lfs.github.com/spec/v1

+oid sha256:dea7b36eec8e2d99e1906b6cbc9cd22f52d2db09cc1a0cf34563585720b6649d

+size 25141

diff --git a/docs/using/images/success-pull-changes.png b/docs/using/images/success-pull-changes.png

new file mode 100644

index 000000000..a246da405

--- /dev/null

+++ b/docs/using/images/success-pull-changes.png

@@ -0,0 +1,3 @@

+version https://git-lfs.github.com/spec/v1

+oid sha256:e0407ab83ca311f319ff7feac0dac84b862935e2b50353ff82c067bdadf80e16

+size 16888

diff --git a/docs/using/images/success-push-changes.png b/docs/using/images/success-push-changes.png

new file mode 100644

index 000000000..8fba1f506

--- /dev/null

+++ b/docs/using/images/success-push-changes.png

@@ -0,0 +1,3 @@

+version https://git-lfs.github.com/spec/v1

+oid sha256:cfd290cb1e52b0acb5503642a3aaef164bf343735729325484e25d8b99293d7e

+size 13262

diff --git a/docs/using/images/switch-confirmation.png b/docs/using/images/switch-confirmation.png

new file mode 100644

index 000000000..92d623d6c

--- /dev/null

+++ b/docs/using/images/switch-confirmation.png

@@ -0,0 +1,3 @@

+version https://git-lfs.github.com/spec/v1

+oid sha256:4ed80f5cf2fab5fd256dd7cd093ad505c966edb95bdc5a15c3f1ace042bec7f6

+size 73947

diff --git a/docs/using/images/switch-or-delete.png b/docs/using/images/switch-or-delete.png

new file mode 100644

index 000000000..deab6badf

--- /dev/null

+++ b/docs/using/images/switch-or-delete.png

@@ -0,0 +1,3 @@

+version https://git-lfs.github.com/spec/v1

+oid sha256:8c0d171b2e8f7e212f2446b416235c6232c57c9b4927cfa41891bffa1940ae9d

+size 73828

diff --git a/docs/using/images/switched-branches.png b/docs/using/images/switched-branches.png

new file mode 100644

index 000000000..c185d25c5

--- /dev/null

+++ b/docs/using/images/switched-branches.png

@@ -0,0 +1,3 @@

+version https://git-lfs.github.com/spec/v1

+oid sha256:542806a8dcc012cdfadadaf9cdab3cf4871bbbbee2c8d73834946df4ed249aec

+size 64281

diff --git a/docs/using/locking-files.md b/docs/using/locking-files.md

new file mode 100644

index 000000000..f90c8e7a3

--- /dev/null

+++ b/docs/using/locking-files.md

@@ -0,0 +1,30 @@

+# Locking files

+

+## Request locks

+

+From the Project tab, right-click on a file to open the context menu and select `Request Lock`.

+

+

+You can also lock a file by selecting it and choosing `Assets` -> `Request Lock`.

+

+## View locks

+

+After requesting a lock, a lock icon appears in the bottom right-hand corner of the file.

+

+A list of all locked files will appear in the **Locks** view in the GitHub tab.

+

+## Release locks

+

+There are three ways to release locks:

+

+1. From the Project tab, right-click on the locked file to open the context menu and select `Release Lock`.

+

+2. From the GitHub tab under the **Locks** view, right-click on the locked file to open the context menu and select `Release Lock`.

+

+3. Select the file to unlock and choose the menu option `Assets` -> `Release Lock`.

+

+Note: There are two options for releasing a lock on a file. Always try the `Release Lock` option first. The `Release Lock (forced)` option can be used to remove someone else's lock.

diff --git a/docs/using/managing-branches.md b/docs/using/managing-branches.md

new file mode 100644

index 000000000..13fa9c1bd

--- /dev/null

+++ b/docs/using/managing-branches.md

@@ -0,0 +1,38 @@

+# Managing branches

+

+Initial **Branches** view

+

+## Create branch

+

+1. From the **Branches** view, click on `master` under local branches to enable the `New Branch` button, which lets you create a new branch from `master`.

+2. Click on `New Branch`.

+

+3. Enter a name for the branch and click `Create`.

+

+4. The new branch will be created from master.

+

+## Checkout branch

+

+1. Right-click on a local branch and select `Switch` or double-click on the branch to switch to it.

+

+2. A dialog will appear asking `Switch branch to 'branch name'?`. Select `Switch`.

+

+The branch will be checked out.

+

+## Delete branches

+

+1. Click on the name of the branch you want to delete; the `Delete` button becomes enabled.

+2. Right-click on a local branch and select `Delete` or click the `Delete` button above the Local branches list.

+

+3. A dialog appears asking `Are you sure you want to delete the branch: 'branch name'?`. Select `Delete`.

+

+The branch will be deleted.
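
For readers who also work from a terminal, the create, switch, and delete steps above correspond roughly to the plain Git commands below. This is a sketch only: the scratch repository and the branch name `my-feature` are made up for the example, and it is an assumption that the extension runs equivalent commands internally.

```shell
set -e

# Scratch repository so the sketch does not touch a real project.
repo="$(mktemp -d)"
cd "$repo"
git init -q
git symbolic-ref HEAD refs/heads/master   # make sure the first branch is named master
git -c user.name="Your Name" -c user.email="you@example.com" \
    commit -q --allow-empty -m "Initial commit"

git branch my-feature master   # Create branch: new branch starting from master
git checkout -q my-feature     # Checkout branch: switch to the new branch
git checkout -q master         # switch back; the current branch cannot be deleted
git branch -d my-feature       # Delete branches: remove the local branch
```

`git branch -d` refuses to delete a branch with unmerged commits, which is a safer default than `-D` for a first attempt.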

diff --git a/docs/using/quick-guide.md b/docs/using/quick-guide.md

new file mode 100644

index 000000000..2011ee40f

--- /dev/null

+++ b/docs/using/quick-guide.md

@@ -0,0 +1,164 @@

+# Quick Guide

+

+## More resources

+

+These documents contain more details on how to use the GitHub for Unity plugin:

+- **[Installing and Updating the GitHub for Unity package](https://github.com/github-for-unity/Unity/blob/master/docs/using/how-to-install-and-update.md)**

+- **[Getting Started with the GitHub for Unity package](https://github.com/github-for-unity/Unity/blob/master/docs/using/getting-started.md)**

+- **[Authenticating to GitHub](https://github.com/github-for-unity/Unity/blob/master/docs/using/authenticating-to-github.md)**

+- **[Managing Branches](https://github.com/github-for-unity/Unity/blob/master/docs/using/managing-branches.md)**

+- **[Locking Files](https://github.com/github-for-unity/Unity/blob/master/docs/using/locking-files.md)**

+- **[Working with Changes](https://github.com/github-for-unity/Unity/blob/master/docs/using/working-with-changes.md)**

+- **[Using the API](https://github.com/github-for-unity/Unity/blob/master/docs/using/using-the-api.md)**

+

+## Table of Contents

+

+[Installing GitHub for Unity](#installing-github-for-unity)

+

+- [Requirements](#requirements)

+ - [Git on macOS](#git-on-macos)

+ - [Git on Windows](#git-on-windows)

+- [Installation](#installation)

+- [Log files](#log-files)

+ - [Windows](#windows)

+ - [macOS](#macos)

+

+[Quick Guide to GitHub for Unity](#quick-guide-to-github-for-unity)

+

+- [Opening the GitHub window](#opening-the-github-window)

+- [Initialize Repository](#initialize-repository)

+- [Authentication](#authentication)

+- [Publish a new repository](#publish-a-new-repository)

+- [Committing your work - Changes tab](#commiting-your-work---changes-tab)

+- [Pushing/pulling your work - History tab](#pushingpulling-your-work---history-tab)

+- [Branches tab](#branches-tab)

+- [Settings tab](#settings-tab)

+

+## Installing GitHub for Unity

+

+### Requirements

+

+- Unity 5.4 or higher

+ - A blocking issue is currently open for 5.3 support, so the extension is known not to run on that version. The Personal edition of Unity is fine.

+- Git and Git LFS 2.x

+

+#### Git on macOS

+

+The current release has limited macOS support. macOS users will need to install the latest [Git](https://git-scm.com/downloads) and [Git LFS](https://git-lfs.github.com/) manually, and make sure both are on the `PATH`. You can configure the Git location in the `Settings` tab of the GitHub window.

+

+The easiest way to install Git and Git LFS is to install [Homebrew](https://brew.sh/) and then run `brew install git git-lfs`.

+

+Make sure a Git user and email address are set in the `~/.gitconfig` file before you initialize a repository for the first time. You can set these values by opening your `~/.gitconfig` file and adding the following section, if it doesn't exist yet:

+

+```

+[user]

+ name = Your Name

+ email = Your Email

+```
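
As an alternative to editing the file by hand, the same `[user]` values can be written with `git config`. This is a minimal sketch: `Your Name` and `you@example.com` are placeholders to replace with your own details, and `--global` targets the per-user `.gitconfig` file.

```shell
# Write the [user] section through git instead of a text editor.
# "Your Name" and "you@example.com" are placeholder values.
git config --global user.name "Your Name"
git config --global user.email "you@example.com"

# Print the stored values to confirm they were written.
git config --global --get user.name
git config --global --get user.email
```

Running the commands again with different values overwrites the earlier ones, so they are safe to repeat.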

+

+#### Git on Windows

+

+The GitHub for Unity extension ships with a bundle of Git and Git LFS, to ensure that you have the correct version. These will be installed into `%LOCALAPPDATA%\GitHubUnity` when the extension runs for the first time.

+

+Make sure a Git user and email address are set in the `%HOME%\.gitconfig` file before you initialize a repository for the first time. You can set these values by opening your `%HOME%\.gitconfig` file and adding the following section, if it doesn't exist yet:

+

+```

+[user]

+ name = Your Name

+ email = Your Email

+```

+